.webp)

Introduction

In today's digital age, with approximately 4.7 billion people worldwide actively using the internet, an astounding 1.7MB of data is generated every second. This exponential growth in internet usage presents a vast opportunity for breakthroughs in data science. Web scraping, especially when integrated with machine learning, emerges as a powerful tool for extracting valuable insights from this colossal volume of data. Data scientists can harness web scraping for machine learning to enable real-time analytics, train predictive models, and enhance natural language processing capabilities.

Our blog delves into every facet of web scraping for machine learning, offering insights into how it operates, its significance, diverse use cases, and best practices. By leveraging web scraping services, data scientists can extract relevant data for training models, leading to more accurate predictions and improved decision-making processes. The synergy between web data extraction and machine learning opens up possibilities, providing a foundation for advancements in predictive analytics and data-driven solutions. As the digital landscape continues to evolve, understanding and embracing the potential of web scraping for machine learning becomes increasingly crucial for staying at the forefront of data science innovations.

What Methods Do Data Scientists Employ for Data Collection?

Data scientists employ various methods to collect data, tailoring their approach based on the specific requirements of their research or analysis. One common strategy is to find existing datasets, which can be sourced from multiple avenues. Public datasets are benchmarks for accuracy in common computer science challenges like image recognition. Data scientists can also purchase datasets from marketplaces, encompassing diverse categories such as consumer behavior, environmental trends, or political insights. Additionally, leveraging a company's private datasets provides exclusive access to organizational data.

Creating a new dataset is another approach involving various techniques. Data scientists can generate data through human labor by designing surveys and collecting responses. Services like Amazon Turk facilitate payment for human tasks such as data labeling. Alternatively, transforming existing data into a dataset is achieved through web scraping. Web crawling, whether manual or facilitated by tools like web crawlers or web scrapers, extracts data from websites, contributing to creating comprehensive datasets.

In the context of machine learning, web scraping using machine learning algorithms further enhances data extraction capabilities. Web scraping services, equipped with machine learning integration, automate the collection of relevant data, ensuring efficiency and accuracy in training predictive models. This synergy between web data extraction and machine learning forms a potent combination for data scientists seeking robust and diverse datasets to drive their analytical endeavors.

Use Cases of Web Scraping Using Machine Learning

Web scraping in data science has become invaluable, providing diverse use cases that contribute to dataset collection, analysis, and enhancement. In particular, integrating web scraping with machine learning has opened up new avenues for extracting insights from the vast and dynamic content available on the internet.

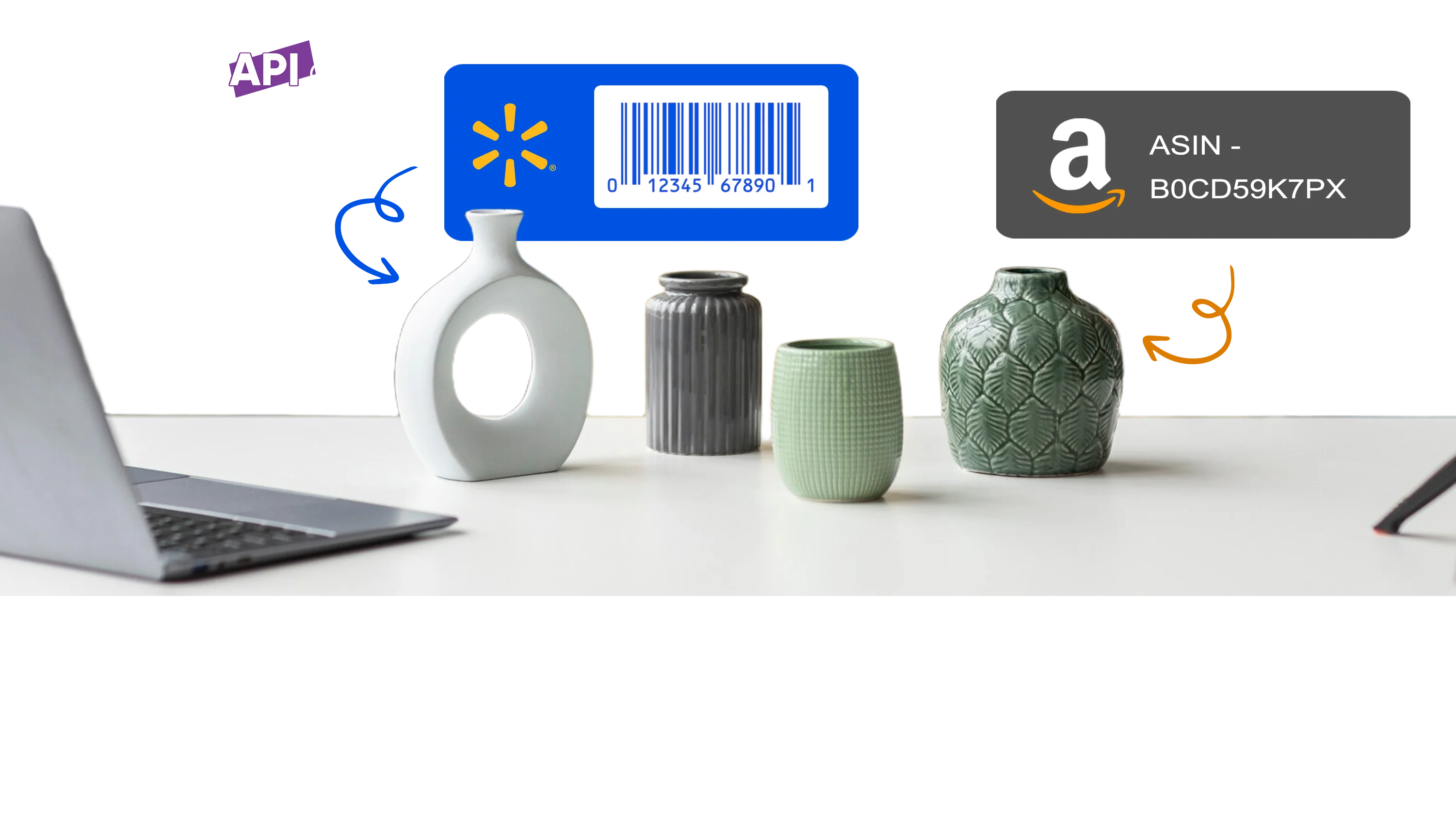

Market Research and Competitor Analysis

Web scraping facilitates extracting pricing data, product information, and customer reviews from e-commerce websites. Data scientists can utilize this information to conduct market research and gain insights into competitor strategies. Machine learning algorithms can then analyze the collected data to identify market trends, pricing patterns, and consumer sentiments.

Financial Data Analysis

Web scraping is widely employed to gather financial data from various sources, such as stock exchanges, financial news websites, and economic indicators. This data is crucial for predicting market trends, developing trading strategies, and making informed investment decisions. Machine learning models can process and analyze this financial data to enhance predictive analytics in the finance sector.

Social Media Sentiment Analysis

Web scraping helps extract social media content, comments, and user reviews. Data scientists can apply machine learning algorithms to perform sentiment analysis, gauging public opinions and sentiments about products, brands, or events. This information is valuable for businesses to understand customer feedback and tailor their strategies accordingly.

Job Market Insights

Web scraping can be applied to job portals and company websites to gather information about job postings, required skills, and industry trends. Machine learning models can then analyze this data to provide insights into the demand for specific skills in the job market, helping professionals and businesses make informed decisions.

Healthcare Data Collection

In healthcare, web scraping can gather information on medical research, clinical trials, and pharmaceutical developments. Machine learning algorithms can analyze this data to identify patterns, potential breakthroughs, and areas for further research.

Real Estate Market Analysis

Web scraping enables the extraction of real estate data, including property prices, location details, and housing trends. Machine learning models can analyze this data to provide insights into market fluctuations, predict property values, and assist buyers and sellers in making informed decisions.

Academic Research

Researchers can use web scraping to collect data from academic journals, conference proceedings, and research publications. Machine learning algorithms can then analyze this data to identify emerging trends, influential authors, and areas of research interest.

Weather Forecasting and Environmental Monitoring

Web scraping can be applied to gather weather data from various sources. Machine learning models can process this data to improve weather forecasting accuracy. Additionally, environmental data such as pollution levels and climate patterns can be extracted and analyzed for better environmental monitoring.

Web scraping enhances data science applications across various industries, especially when integrated with machine learning. By automating the extraction of relevant information from the web, data scientists can leverage diverse datasets to derive meaningful insights and drive informed decision-making processes.

Data Science Projects Showcasing the Power of Web Scraping: Real-world Examples

In data science, web scraping has emerged as a potent tool, facilitating the extraction of valuable insights from the expansive and dynamic internet landscape. Numerous real-world projects underscore the transformative impact of web scraping, particularly when integrated with machine learning, on advancing data science applications.

GPT-3 by OpenAI

An exemplary illustration is the development of GPT-3 (Generative Pre-trained Transformer) by OpenAI. GPT-3's remarkable capabilities in generating human-like text, including code for machine learning frameworks and completing human speech, are a testament to its training on web data. Sourced from diverse outlets such as Wikipedia and Common Crawl's web archive, GPT-3 showcases how web scraping, particularly when utilizing machine learning, contributes to constructing sophisticated language models with practical applications.

LaMDA by Google

Google's LaMDA (Language Model for Dialogue Applications) is another notable project where web scraping is pivotal. LaMDA stands out for its proficiency in engaging in open-ended conversations with users. Its distinctive training approach utilizes "dialogue" training sets acquired through web scraping from various internet resources. This methodology empowers LaMDA to generate free-flowing conversations, exemplifying the adaptability and responsiveness of web-scraped data to language models.

Similar Web for Business Insights

Similar web, an online platform delivering insights into website metrics like traffic and engagement, exemplifies the application of web scraping in the business realm. By aggregating data from diverse public sources, including Wikipedia, Census, and Google Analytics, Similar Web generates comprehensive reports crucial for businesses engaging in competition analysis and optimizing marketing strategies. This project showcases how web scraping services, integrated with machine learning, provide real-time and pertinent data for informed strategic decision-making.

These real-world instances highlight the manifold applications of web scraping in data science projects, emphasizing its instrumental role in harnessing the wealth of information accessible on the web. As data science evolves, the strategic integration of web scraping with machine learning remains pivotal for accessing, analyzing, and leveraging the internet's extensive datasets. Collectively, these projects stand as a testament to the transformative potential of web scraping in advancing the capabilities of data science across various domains.

What Difficulties are Associated with Web Scraping?

Web scraping, while a powerful and widely used technique, comes with challenges that data scientists and developers must navigate. These challenges are integral to the process and require careful consideration when undertaking web scraping projects, especially when integrated with machine learning. Here are some key challenges associated with web scraping:

Website Structure Changes

Websites are dynamic, and their structure can change over time due to updates or redesigns. This challenges web scraping as the previously defined patterns may become obsolete. Regular monitoring and adaptation of scraping scripts are necessary to accommodate changes in website structure.

Anti-Scraping Measures

Websites may implement anti-scraping measures to protect their data and prevent automated scraping. These measures include CAPTCHAs, IP blocking, and honeypot traps. Overcoming these obstacles requires developing sophisticated scraping techniques or utilizing web scraping services with advanced capabilities.

Legal and Ethical Concerns

Web scraping raises legal and ethical considerations, as scraping without permission may violate a website's terms of service. It's crucial to adhere to ethical guidelines, respect website policies, and ensure compliance with relevant laws to avoid legal consequences.

Data Quality and Reliability

Ensuring the quality and reliability of scraped data can be challenging. Websites may have inconsistencies, inaccuracies, or incomplete information. Machine learning models trained on such data may produce biased or unreliable results. Validation and cleansing processes are essential to enhance the reliability of the extracted data.

Rate Limiting and Throttling

Many websites implement rate-limiting and throttling mechanisms to control the frequency of requests from a single IP address. Exceeding these limits may result in IP blocking or temporary bans. Effectively managing request rates and incorporating delays in scraping processes is crucial to avoid detection and sanctions.

Dynamic Content Loading

Modern websites often use dynamic content-loading techniques through JavaScript. Traditional scraping methods may not capture dynamically generated content. Addressing this challenge requires advanced techniques or tools that can interact with JavaScript, ensuring comprehensive data extraction.

Dependency on Source Stability

Web scraping projects are inherently dependent on the stability of the data source. If the source website undergoes significant changes, it can disrupt the scraping process. This dependency underscores the importance of continuous monitoring and adaptation to ensure data continuity.

Navigating these challenges requires technical expertise, adaptability, and adherence to ethical practices. Employing web scraping services with machine learning capabilities can enhance the efficiency and reliability of the scraping process, addressing some of the challenges associated with dynamic websites and evolving data sources.

Web Scraping Using Machine Learning

Real Data API employs advanced Machine Learning algorithms to elevate the web scraping process. By integrating Machine Learning into web scraping services, Actowiz ensures a sophisticated and adaptive approach to extracting data from the ever-evolving landscape of the internet. This synergy enhances accuracy, efficiency, and flexibility in handling diverse web content.

Key Features and Benefits

Precision in Data Extraction:

Machine Learning algorithms enable Actowiz to tailor scraping methodologies based on each website's specific structure and content. This precision ensures the accurate extraction of relevant data, even from complex and dynamic web pages.

Adaptability to Website Changes:

Real Data API understands the dynamic nature of websites, where structures can change over time. By incorporating Machine Learning, the web scraping services offered can adapt to website lay layout modifications, ensuring continued data extraction effectiveness.

Efficient Handling of Anti-Scraping Measures:

Websites implement anti-scraping measures to protect their data. Actowiz, utilizing Machine Learning, develops strategies to overcome challenges such as CAPTCHAs, IP blocking, and other anti-scraping mechanisms, ensuring a smooth and uninterrupted scraping process.

Data Quality Assurance:

Machine Learning models integrated into the scraping process enhance data quality and reliability. Actowiz employs validation techniques to ensure that the scraped data is accurate, consistent, and free from inconsistencies.

Customized Solutions for Machine Learning Integration:

Real Data API recognizes the uniqueness of each web scraping project. By offering customized solutions integrating Machine Learning according to project requirements, Actowiz ensures optimal performance and results tailored to specific business needs.

Conclusion

Real Data API is a frontrunner in web scraping using Machine Learning. With a commitment to precision, adaptability, and data quality, Actowiz empowers businesses to glean actionable insights from the vast expanse of online data. Whether navigating complex website structures or addressing evolving anti-scraping measures, Real Data API delivers innovative and effective web scraping services driven by the capabilities of Machine Learning. For more details, contact Real Data API now!