
Introduction

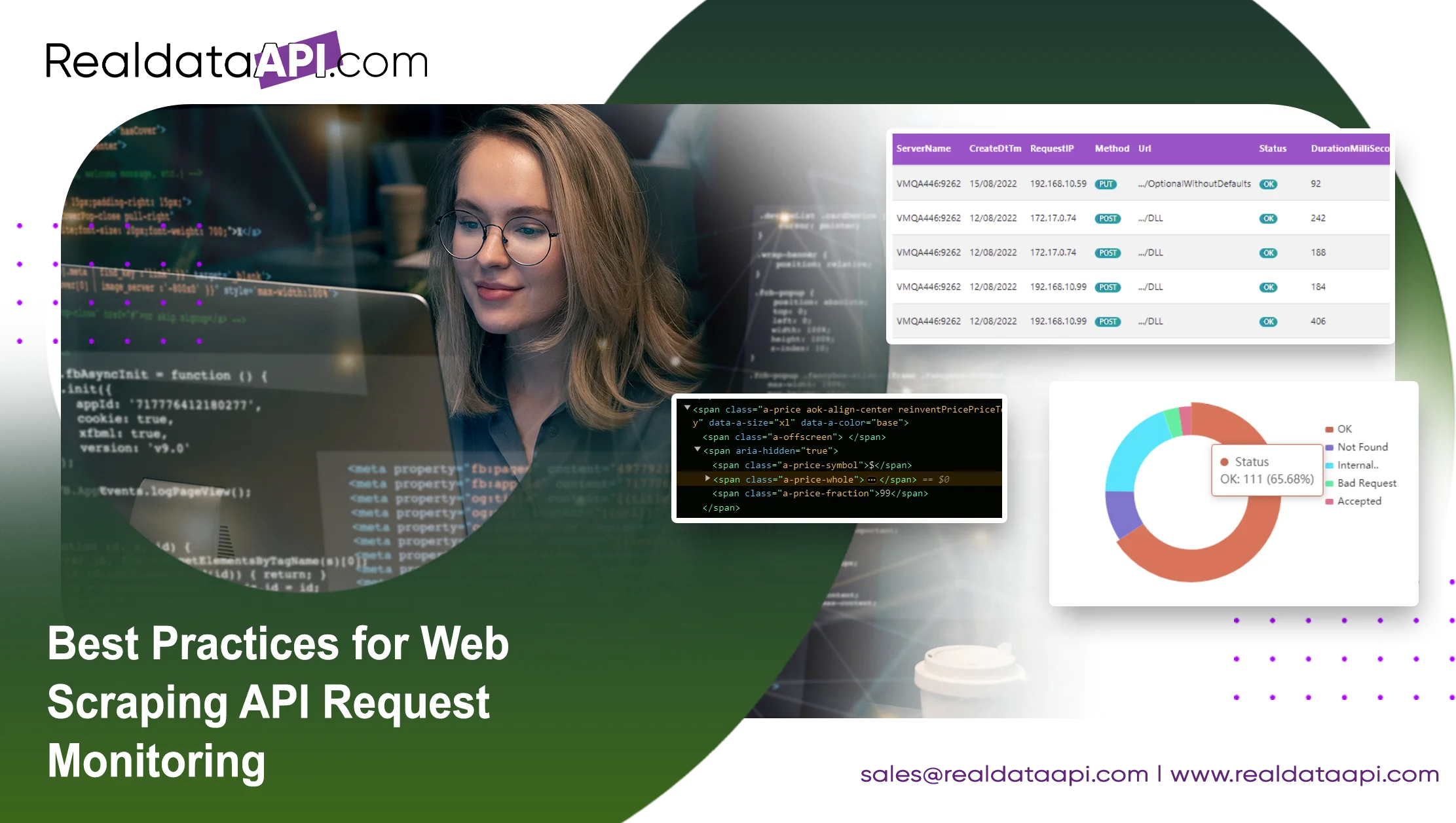

Web scraping has become an indispensable tool for extracting structured data from websites, allowing businesses to gather insights, track trends, and analyze competitors. Many modern web scraping strategies rely on APIs (Application Programming Interfaces), which provide a more structured and reliable way to fetch data than traditional HTML parsing. Managing and monitoring these API requests is therefore crucial for seamless, reliable, and efficient scraping, especially when extracting app data through APIs.

This post explores best practices for web scraping API request monitoring, covering API data rendering, API response analysis, API request validation, and more.

1. Understanding the Importance of API Request Monitoring

Before diving into best practices, it helps to understand why API request monitoring matters for any web scraping operation. Monitoring allows you to:

- Ensure the availability and reliability of the data-fetching API.

- Detect and resolve errors early, preventing disruptions in the scraping pipeline.

- Track the performance and speed of backend API calls.

- Comply with rate limits and prevent overloading the target servers.

Proper API request monitoring ensures smooth operation and safeguards the long-term sustainability of your scraping system.

2. Efficient Logging and Tracking of API Requests

One of the core aspects of API request monitoring is keeping track of every API call. A robust logging system ensures you can audit and troubleshoot API requests when issues arise. Here’s what you should track:

- Timestamp: When the API request was made.

- Response Time: How long the API took to respond.

- Status Codes: The HTTP response code (e.g., 200 for success, 404 for not found, 500 for server error).

- Rate Limits: Any rate-limit headers the API returns, so you can avoid exceeding request limits.

Analyzing this request log gives you valuable data on performance and errors and helps you spot patterns such as slow response times or frequent failures.
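The fields above map naturally onto one structured log line per request. The following Python sketch uses only the standard library; the rate-limit header names are common conventions rather than a standard, and `make_log_entry` and `logged_get` are illustrative names, not part of any library.

```python
import json
import logging
import time
import urllib.error
import urllib.request
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, Mapping

logger = logging.getLogger("api_requests")

# Rate-limit header names are not standardized; these are common conventions (assumption).
RATE_LIMIT_HEADERS = ("X-RateLimit-Limit", "X-RateLimit-Remaining", "X-RateLimit-Reset", "Retry-After")


@dataclass
class RequestLogEntry:
    timestamp: str            # when the request was sent (ISO 8601, UTC)
    url: str
    status_code: int
    response_time_ms: float
    rate_limits: Dict[str, str] = field(default_factory=dict)


def make_log_entry(url: str, status_code: int, headers: Mapping[str, str],
                   started_at: float, elapsed_s: float) -> RequestLogEntry:
    """Build one log record; header names are matched case-insensitively."""
    lowered = {name.lower(): value for name, value in headers.items()}
    rate_limits = {name: lowered[name.lower()] for name in RATE_LIMIT_HEADERS
                   if name.lower() in lowered}
    return RequestLogEntry(
        timestamp=datetime.fromtimestamp(started_at, tz=timezone.utc).isoformat(),
        url=url,
        status_code=status_code,
        response_time_ms=round(elapsed_s * 1000, 2),
        rate_limits=rate_limits,
    )


def logged_get(url: str, timeout: float = 10.0) -> bytes:
    """GET `url` with urllib and write one JSON log line per request."""
    started_at = time.time()          # wall clock, for the timestamp
    start = time.perf_counter()       # monotonic clock, for measuring duration
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read()
            status, headers = resp.status, resp.headers
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status and headers
        body, status, headers = err.read(), err.code, err.headers
    entry = make_log_entry(url, status, headers, started_at, time.perf_counter() - start)
    logger.info(json.dumps(asdict(entry)))
    return body
```

Call `logging.basicConfig(level=logging.INFO)` once at startup to see the log lines. Writing each entry as JSON keeps the log easy to load into whatever analysis tool you use later. Network-level failures (DNS errors, timeouts) are not caught here and would need their own handling.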

3. API Response and Data Rendering Analysis

After an API request is made, it is essential to ensure that the API data rendering process works correctly. This means checking that the fetched data is presented as expected and that no data is missing or corrupted.

Check API Data Rendering:

- Confirm that the API response matches the expected data structure (JSON, XML, etc.).

- Use schema validation tools to compare the response against the expected data format.

- Monitor changes in API response structure, which might indicate a change in the API itself.

Regular API response analysis keeps your scraper retrieving accurate, usable data and helps you catch API changes before they cause problems.
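As an illustration, the check below compares each item in a JSON response against an expected field-to-type mapping and flags missing, mistyped, or unexpected fields. The schema and the assumption that the API returns a JSON array of objects are hypothetical; for larger or nested payloads, a JSON Schema validator such as the third-party `jsonschema` package is a more complete option.

```python
import json
from typing import Any, Dict, List

# Hypothetical expected shape of each item in the response; adjust to the real API.
EXPECTED_ITEM_SCHEMA: Dict[str, type] = {"id": int, "title": str, "tags": list}


def check_item(item: Dict[str, Any], schema: Dict[str, type]) -> List[str]:
    """Return a list of problems; an empty list means the item matches the schema."""
    problems = []
    for name, expected_type in schema.items():
        if name not in item:
            problems.append(f"missing field: {name}")
        elif not isinstance(item[name], expected_type):
            problems.append(f"wrong type for {name}: expected {expected_type.__name__}, "
                            f"got {type(item[name]).__name__}")
    # Fields the schema doesn't know about often mean the API's structure has changed.
    for name in sorted(item.keys() - schema.keys()):
        problems.append(f"unexpected field: {name}")
    return problems


def check_response(raw_body: str, schema: Dict[str, type]) -> List[str]:
    """Validate a response body assumed to be a JSON array of objects."""
    try:
        data = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, list):
        return [f"expected a JSON array, got {type(data).__name__}"]
    problems = []
    for index, item in enumerate(data):
        if not isinstance(item, dict):
            problems.append(f"item {index}: not a JSON object")
            continue
        problems.extend(f"item {index}: {p}" for p in check_item(item, schema))
    return problems
```

Logging a non-empty result from `check_response` over time shows when an API starts adding or dropping fields.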

4. API Request Validation

API request validation ensures that the data sent in requests is correct and adheres to the expected format. This is especially important when working with data-fetching APIs that require parameters like authentication tokens, query strings, or body payloads.

Here are a few best practices for API request validation:

- Parameter Validation: Ensure all required parameters are included, and their values are valid.

- Authentication: Ensure authentication headers (API keys, OAuth tokens) are present and valid.

- Data Sanitization: Clean and format input data to prevent sending malformed requests.

Validating API requests upfront helps prevent errors like 401 Unauthorized or 400 Bad Request responses, which could break the data extraction process.
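A minimal sketch of upfront validation and sanitization, assuming a hypothetical search endpoint that requires `query` and `page` parameters and a bearer token in the `Authorization` header. Rejecting a malformed request locally is cheaper than learning about the problem from a 400 or 401 response.

```python
from typing import Any, Dict, List
from urllib.parse import urlencode

# Hypothetical requirements for an example search endpoint.
REQUIRED_PARAMS = {"query", "page"}


def validate_request(params: Dict[str, Any], headers: Dict[str, str]) -> List[str]:
    """Check a request before sending it; returns a list of problems (empty = OK)."""
    errors = []
    missing = REQUIRED_PARAMS - set(params)
    if missing:
        errors.append("missing parameters: " + ", ".join(sorted(missing)))
    page = params.get("page")
    if page is not None and (isinstance(page, bool) or not isinstance(page, int) or page < 1):
        errors.append("page must be a positive integer")
    # Assumes bearer-token auth; an API-key header would be checked the same way.
    auth = headers.get("Authorization", "")
    if not auth.startswith("Bearer ") or not auth[len("Bearer "):].strip():
        errors.append("missing or malformed Authorization header")
    return errors


def build_url(base_url: str, params: Dict[str, Any]) -> str:
    """Sanitize parameters (trim strings, drop None values) and URL-encode them."""
    cleaned = {key: value.strip() if isinstance(value, str) else value
               for key, value in params.items() if value is not None}
    return f"{base_url}?{urlencode(cleaned)}"
```

For example, `build_url("https://api.example.com/search", {"query": "  running shoes ", "page": 2, "sort": None})` trims the query, drops the empty `sort` parameter, and encodes the space, giving `https://api.example.com/search?query=running+shoes&page=2`.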

5. Error Handling and Retries

Errors are inevitable when scraping data via APIs, but how you handle them makes a significant difference. A proper error-handling mechanism should log errors and retry failed requests. Here’s what to consider:

- HTTP Status Code Handling: Handle common errors like 404 Not Found, 500 Internal Server Error, and 429 Too Many Requests with appropriate logic.

- Retry Logic: Set up automatic retries with exponential backoff in case of temporary failures.

- Graceful Failures: Ensure the system fails gracefully, logging errors for analysis without disrupting the entire scraping process.

By incorporating robust error handling, you can minimize the risk of data loss and ensure that your scraper continues to operate even when issues occur.
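One way to combine these three ideas is sketched below in Python. `send` is a hypothetical callable that performs one request and returns `(status_code, body)`; in a real scraper it would wrap your HTTP client. Retries use exponential backoff with random jitter, non-retryable errors such as 404 are logged and skipped, and the function returns `None` instead of raising so the rest of the run can continue.

```python
import logging
import random
import time
from typing import Callable, Optional, Tuple

logger = logging.getLogger("scraper")

# 429 and transient 5xx errors are worth retrying; most other 4xx (e.g. 404) are not.
RETRYABLE_STATUSES = {429, 500, 502, 503, 504}


def fetch_with_retries(send: Callable[[], Tuple[int, str]],
                       max_attempts: int = 5,
                       base_delay: float = 1.0,
                       max_delay: float = 30.0,
                       sleep: Callable[[float], None] = time.sleep) -> Optional[str]:
    """Call `send()` (which returns (status, body)) with exponential backoff.

    Returns the body on success, or None after logging the failure, so one bad
    request does not stop the whole scraping run.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            status, body = send()
        except OSError as exc:  # socket and urllib errors, including timeouts, subclass OSError
            logger.warning("attempt %d: network error: %s", attempt, exc)
        else:
            if 200 <= status < 300:
                return body
            if status not in RETRYABLE_STATUSES:
                logger.error("attempt %d: status %d is not retryable, skipping", attempt, status)
                return None
            logger.warning("attempt %d: got retryable status %d", attempt, status)
        if attempt < max_attempts:
            # Exponential backoff with full jitter: wait a random time in
            # [0, base_delay * 2**(attempt - 1)], capped at max_delay.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** (attempt - 1))))
    logger.error("giving up after %d attempts", max_attempts)
    return None
```

Jitter spreads retries from many workers over time, so they do not all hit the API again at the same moment. `sleep` is a parameter only so the function can be tested without real waiting.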

6. Rate Limiting and API Throttling

Respecting rate limits is critical to scraping APIs without getting blocked. Most APIs limit the number of requests made within a specific time frame. Exceeding these limits can lead to temporary or permanent bans from the API service.

To prevent this, monitor your request volume and API performance, focusing on:

- Request Frequency: Adjust the frequency of your API calls to stay within the allowed rate limits.

- Throttling Mechanism: Build throttling into your scraper so it delays API requests as it approaches rate limits.

- Backoff Strategy: In the case of HTTP 429 Too Many Requests, employ an exponential backoff to slow down requests and avoid further rate limiting.

These strategies help you avoid being blocked and ensure a smooth and continuous scraping process.
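A token bucket is one common way to implement client-side throttling: tokens refill at a fixed rate, each request spends one, and the scraper waits when the bucket is empty. The sketch below pairs it with a helper for the standard `Retry-After` response header, which many APIs send with 429 responses (either as a number of seconds or as an HTTP date). The rate and capacity values are placeholders; set them from the API's documented limits.

```python
import time
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Callable, Optional


class TokenBucket:
    """Client-side throttle: on average `rate` requests/second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.sleep = sleep
        self.updated = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now

    def acquire(self) -> None:
        """Block until a request may be sent, then consume one token."""
        self._refill()
        if self.tokens < 1:
            # Sleep just long enough for the missing fraction of a token to accumulate.
            self.sleep((1 - self.tokens) / self.rate)
            self._refill()
        self.tokens -= 1


def retry_after_seconds(value: Optional[str], default: float = 1.0) -> float:
    """Interpret a Retry-After header value (delay in seconds or an HTTP date)."""
    if value is None:
        return default
    try:
        return max(0.0, float(value))
    except ValueError:
        pass
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # unparseable date
        return default
    if when.tzinfo is None:  # treat dates without a zone as UTC
        when = when.replace(tzinfo=timezone.utc)
    return max(0.0, (when - datetime.now(timezone.utc)).total_seconds())
```

For example, `TokenBucket(rate=5, capacity=10)` allows bursts of up to 10 requests and about 5 per second sustained. Call `acquire()` before every request; on a 429, wait for the `Retry-After` delay (when present) before falling back to exponential backoff.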

7. Real-Time API Monitoring and Alerts

Real-time monitoring is crucial for ensuring your scraper keeps functioning correctly. Real-time API analysis tools can notify you immediately when something goes wrong. Consider the following practices:

- Automated Alerts: Use monitoring tools to send alerts when response times spike, errors occur frequently, or rate limits are approached.

- Performance Dashboards: Implement dashboards to visualize API performance metrics, including response times, request frequency, and error rates.

- Health Checks: Periodically send simple API requests to check the service's availability and responsiveness.

Real-time API request monitoring provides you with actionable insights, allowing you to quickly address issues before they impact your web scraping pipeline.
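The alerting logic behind such tools can be sketched as a sliding window over recent requests. The thresholds below are illustrative defaults, not recommendations, and `alert` is any callable you supply (a hypothetical hook that might post to a chat channel or paging service). A periodic health-check request can be fed through the same `record` method.

```python
import math
from collections import deque
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Thresholds:
    # Example values only; tune them for the API you are scraping.
    max_error_rate: float = 0.05
    max_p95_latency_ms: float = 2000.0
    min_rate_limit_remaining: int = 10
    min_samples: int = 20  # don't judge error rate or latency on too few requests


class ApiMonitor:
    """Keeps a sliding window of recent requests and calls `alert` when a threshold is crossed."""

    def __init__(self, alert: Callable[[str], None], window: int = 200,
                 thresholds: Optional[Thresholds] = None):
        self.alert = alert
        self.thresholds = thresholds or Thresholds()
        self.samples = deque(maxlen=window)  # (latency_ms, succeeded) pairs

    def error_rate(self) -> float:
        if not self.samples:
            return 0.0
        failures = sum(1 for _, ok in self.samples if not ok)
        return failures / len(self.samples)

    def p95_latency_ms(self) -> float:
        latencies = sorted(latency for latency, _ in self.samples)
        if not latencies:
            return 0.0
        # Nearest-rank 95th percentile.
        return latencies[math.ceil(0.95 * len(latencies)) - 1]

    def record(self, latency_ms: float, status_code: int,
               rate_limit_remaining: Optional[int] = None) -> None:
        self.samples.append((latency_ms, status_code < 400))
        t = self.thresholds
        if rate_limit_remaining is not None and rate_limit_remaining < t.min_rate_limit_remaining:
            self.alert(f"rate limit nearly exhausted: {rate_limit_remaining} requests left")
        if len(self.samples) < t.min_samples:
            return
        if self.error_rate() > t.max_error_rate:
            self.alert(f"error rate {self.error_rate():.1%} above {t.max_error_rate:.1%}")
        if self.p95_latency_ms() > t.max_p95_latency_ms:
            self.alert(f"p95 latency {self.p95_latency_ms():.0f} ms above {t.max_p95_latency_ms:.0f} ms")
```

The same `error_rate()` and `p95_latency_ms()` values are natural series to plot on a performance dashboard. In production you would likely also rate-limit the alerts themselves so a sustained problem does not send one message per request.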

8. Backend API Calls and API Performance Tracking

When scraping data, track the performance of both frontend and backend API calls. Backend API calls often involve more complex data fetching and processing, so tracking their performance is key to optimizing your scraper’s efficiency.

Key Metrics to Track:

- Latency: Measure how long it takes to complete an API request from start to finish.

- Concurrency: Monitor how many requests are being handled simultaneously, as too many concurrent requests can degrade performance.

- Success Rates: Track the percentage of successful requests versus failed ones.

Actively monitoring these metrics lets you identify bottlenecks and tune your scraper for better performance.
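The three metrics can be collected together when requests run concurrently. This asyncio sketch caps concurrency with a semaphore and records per-request latency, peak concurrency, and success rate. `fetch` is an assumed async function that performs one request and returns its HTTP status code (for example, a thin wrapper around an async HTTP client).

```python
import asyncio
import time
from dataclasses import dataclass, field
from typing import Awaitable, Callable, Iterable, List


@dataclass
class PerfStats:
    latencies_ms: List[float] = field(default_factory=list)
    successes: int = 0
    failures: int = 0
    in_flight: int = 0
    peak_concurrency: int = 0

    @property
    def success_rate(self) -> float:
        total = self.successes + self.failures
        return self.successes / total if total else 0.0


async def run_tracked(urls: Iterable[str],
                      fetch: Callable[[str], Awaitable[int]],
                      max_concurrency: int = 5) -> PerfStats:
    """Fetch all URLs with at most `max_concurrency` in flight, recording metrics."""
    stats = PerfStats()
    semaphore = asyncio.Semaphore(max_concurrency)

    async def one(url: str) -> None:
        async with semaphore:
            stats.in_flight += 1
            stats.peak_concurrency = max(stats.peak_concurrency, stats.in_flight)
            start = time.perf_counter()
            try:
                status = await fetch(url)
            except Exception:  # count any error as a failed request
                status = None
            finally:
                stats.latencies_ms.append((time.perf_counter() - start) * 1000)
                stats.in_flight -= 1
            if status is not None and 200 <= status < 300:
                stats.successes += 1
            else:
                stats.failures += 1

    await asyncio.gather(*(one(url) for url in urls))
    return stats
```

Comparing latency and success rate across runs with different `max_concurrency` values is a simple way to find the point where more parallelism stops helping and starts degrading performance.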

9. API Testing Tools

Testing your scraping tool against different APIs is critical to maintaining a reliable operation. Several API testing tools are available to help validate API requests and responses before integrating them into your scraping tool.

Recommended API Testing Tools:

- Postman: A popular tool for making, testing, and validating API requests.

- Insomnia: Another great API testing tool for sending requests and monitoring responses.

- Swagger: A toolset for documenting and testing APIs described with the OpenAPI Specification, especially useful when working with publicly documented APIs.

These tools help identify potential issues and fine-tune your scraper for maximum efficiency.
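Interactive tools are well suited to exploring an API; once a response shape is understood, it can also be locked in with an automated test. The sketch below stores a sample response as a fixture (for example, one saved from Postman or Insomnia) and checks a parser against it with Python's built-in `unittest`. The payload and `parse_items` are hypothetical stand-ins for your own API and parsing code.

```python
import json
import unittest

# A response captured once and stored as a fixture.
# The shape is a hypothetical example, not any particular API.
SAMPLE_RESPONSE = """
{"items": [{"id": 1, "title": "Widget", "price": "9.99"},
           {"id": 2, "title": "Gadget", "price": "24.50"}],
 "next_page": 2}
"""


def parse_items(raw: str) -> list:
    """Hypothetical scraper parser: turns the API payload into (id, title, price) rows."""
    data = json.loads(raw)
    return [(item["id"], item["title"], float(item["price"])) for item in data["items"]]


class ParseItemsTest(unittest.TestCase):
    def test_parses_all_items(self):
        self.assertEqual(parse_items(SAMPLE_RESPONSE),
                         [(1, "Widget", 9.99), (2, "Gadget", 24.5)])

    def test_missing_items_key_fails_loudly(self):
        with self.assertRaises(KeyError):
            parse_items('{"results": []}')
```

Run it with `python -m unittest`. Refreshing the fixture from a live response now and then makes the test fail when the API changes shape, before the scraper silently produces bad data.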

10. Analyzing the Data Flow

Analyzing the API data flow is crucial for optimizing data movement through your system. Understanding the journey from request to response and then through data parsing and storage helps you identify areas for improvement.

API Data Flow Analysis:

- Data Validation: Check that the API returns valid data before processing it.

- Data Transformation: Ensure the fetched data is correctly transformed into the required format.

- Data Storage: Optimize how the scraped data is stored for further analysis.

Data should move from fetching through storage smoothly and efficiently, keeping processing and analysis delays to a minimum.
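The three stages can be kept as separate, testable functions. The sketch below validates raw records, transforms them into rows, and stores them in SQLite (an in-memory database in the example) with one batched write. The field names and table layout are hypothetical.

```python
import sqlite3
from typing import Any, Dict, Iterable, Optional, Tuple

Row = Tuple[int, str, Optional[float]]


def _is_number(value: Any) -> bool:
    try:
        float(value)
        return True
    except (TypeError, ValueError):
        return False


def is_valid(record: Dict[str, Any]) -> bool:
    """Validation step: reject records missing or malforming fields used downstream."""
    price = record.get("price")
    return (isinstance(record.get("id"), int)
            and isinstance(record.get("title"), str)
            and (price is None or _is_number(price)))


def to_row(record: Dict[str, Any]) -> Row:
    """Transformation step: normalize text and convert price strings to numbers."""
    price = record.get("price")
    return (record["id"], record["title"].strip(), float(price) if price is not None else None)


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE IF NOT EXISTS items "
                 "(id INTEGER PRIMARY KEY, title TEXT NOT NULL, price REAL)")


def run_pipeline(records: Iterable[Dict[str, Any]], conn: sqlite3.Connection) -> Tuple[int, int]:
    """Validate -> transform -> store; returns (stored, rejected) counts."""
    rows, rejected = [], 0
    for record in records:
        if is_valid(record):
            rows.append(to_row(record))
        else:
            rejected += 1
    # Storage step: one batched upsert per batch instead of one write per record.
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT OR REPLACE INTO items (id, title, price) VALUES (?, ?, ?)", rows)
    return len(rows), rejected
```

Tracking the rejected count alongside the stored count is a cheap signal that something upstream in the data flow has changed.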

Conclusion

Web scraping API request monitoring is critical to building and maintaining a robust data extraction system. By following these best practices, such as monitoring performance, handling errors properly, and using the right tools, you can keep your API-based scrapers efficient and reliable. From API request log analysis to real-time API monitoring, every aspect of API scraping requires attention to detail to ensure long-term success.

Ultimately, effective API request monitoring improves both performance and the quality and reliability of the data you gather, giving your business actionable insights. Focusing on key areas like API response analysis, API request validation, and API performance tracking streamlines your web scraping processes, reduces errors, and helps you stay within API usage terms and data protection requirements.

Real Data API is your trusted partner in delivering expert web scraping services with robust API monitoring. Contact us today to optimize your data extraction process and unlock valuable insights!