Introduction

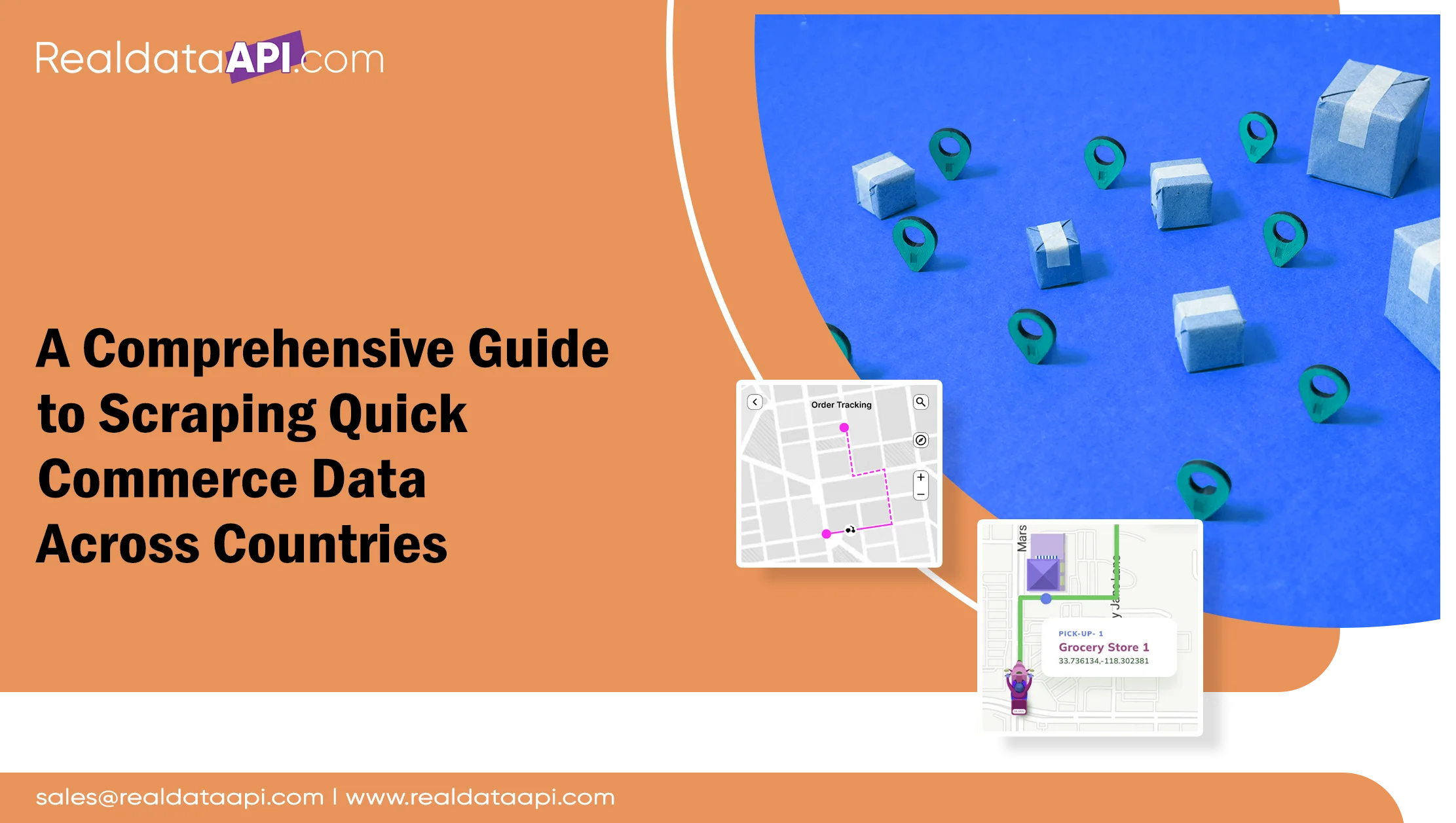

The emergence of quick commerce has transformed consumer shopping experiences, offering unmatched convenience and rapid delivery. In this fast-paced and highly competitive market, businesses must stay ahead by leveraging data effectively. This is where web scraping techniques become invaluable. In this blog, we explore the fundamentals of efficiently extracting quick commerce data across multiple countries, highlighting best practices, essential tools, and APIs to streamline the process and maximize results.

Why Scrape Quick Commerce Data?

Quick commerce platforms like Instacart, Deliveroo, and Zomato have revolutionized the way consumers shop and dine. Operating across diverse regions, these platforms cater to varying consumer behaviors, product preferences, and pricing models. Extracting data from these platforms offers businesses an invaluable opportunity to gain insights and refine their strategies.

Analyze Market Trends

Understanding consumer behavior is crucial for staying relevant in any market. By extracting data on popular products, delivery times, and customer feedback, businesses can identify trends specific to each region. This information helps businesses adapt their offerings to meet local demands and enhance customer satisfaction.

Competitive Analysis

Web scraping allows businesses to monitor competitors’ pricing strategies, inventory levels, and promotional campaigns. This enables the creation of competitive pricing models and targeted marketing strategies to attract and retain customers.

Optimize Offerings

Diverse consumer preferences require tailored products and services. By analyzing data on product categories and regional demand, businesses can refine their offerings to resonate with local markets. This personalization leads to better customer engagement and increased loyalty.

Forecast Demand

Data extraction facilitates predictive analytics, enabling businesses to anticipate demand fluctuations and optimize their supply chains. This reduces the risk of overstocking or understocking and ensures a seamless customer experience.

Maximizing Potential with Web Scraping

Web scraping turns the product listings, prices, and delivery details shown on quick commerce platforms into structured data that can be refreshed as often as those pages change. With this data, businesses can make informed decisions, refine their growth strategies, and remain competitive in a rapidly evolving market. From market analysis to operational optimization, quick commerce data gives retailers a concrete basis for decisions in the modern retail landscape.

Challenges in Scraping Quick Commerce Data Globally

Scraping quick commerce data across multiple countries isn’t without its challenges. Some common issues include:

Localized Data Formats:

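Countries format prices, numbers, dates, and addresses differently (for example, 1.299,50 € in Germany vs. ₹1,299.50 in India), and each regional site may structure its pages differently.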

Language Barriers:

Multilingual platforms may require language-specific parsers.

Dynamic Content:

Many platforms load content with JavaScript after the initial page load, so the HTML returned by a simple HTTP request may not contain the product data at all.

Legal Considerations:

Scraping regulations vary by country, necessitating compliance with local laws.

Anti-Scraping Mechanisms:

Platforms often deploy measures like CAPTCHAs and IP blocking to deter automated bots.

To navigate these challenges, a robust strategy is essential.

Best Practices for Web Scraping Quick Commerce Data

1. Use Reliable Tools and APIs

Selecting the right tools and APIs for quick commerce data scraping is crucial. Popular options include:

BeautifulSoup and Scrapy:

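Python libraries for building custom scrapers: BeautifulSoup parses HTML documents, while Scrapy is a full framework for crawling sites and exporting the results.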

Quick Commerce Data Scraping Tools:

Real Data API offers user-friendly interfaces for extracting data without coding.

Quick Commerce Scraping API:

APIs like SerpApi and Apify provide pre-configured solutions tailored to specific platforms.

2. Implement Proxy Management

Rotating proxies spread requests across many IP addresses, which reduces the chance of hitting rate limits and IP bans. Providers such as Bright Data or Smartproxy offer rotating proxy pools, including IP addresses located in specific countries.

3. Handle Localization

Account for regional differences in:

Currencies:

Ensure data is converted to a standard currency for comparison.

Measurement Units:

Normalize local units (grams vs. ounces, liters vs. fluid ounces) so that prices per unit are comparable across markets.

Language:

Use language detection and translation services such as the Google Cloud Translation API when product names or reviews need to be compared across languages.
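The currency and unit steps above can be sketched in a few lines. This is a minimal example under stated assumptions: the exchange rates are placeholders (load current rates from a rates provider before comparing real prices), the caller is assumed to know each country's decimal separator from configuration, and translation is left to an external service. Decimal is used instead of float so that prices are not distorted by binary rounding.

```python
from decimal import Decimal
from typing import Dict

# Placeholder exchange rates for illustration only; not real market rates.
RATES_TO_USD: Dict[str, Decimal] = {
    "USD": Decimal("1"),
    "EUR": Decimal("1.08"),
    "GBP": Decimal("1.27"),
    "INR": Decimal("0.012"),
}

# Factors to a base unit: grams for mass, millilitres for volume.
# "fl_oz" is the US fluid ounce.
UNIT_TO_BASE: Dict[str, Decimal] = {
    "g": Decimal("1"), "kg": Decimal("1000"), "oz": Decimal("28.3495"),
    "ml": Decimal("1"), "l": Decimal("1000"), "fl_oz": Decimal("29.5735"),
}


def parse_price(text: str, decimal_sep: str) -> Decimal:
    """Parse a localized price such as '1.299,50 €' (decimal_sep=',')
    or '₹1,299.50' (decimal_sep='.') into a Decimal."""
    thousands_sep = "." if decimal_sep == "," else ","
    # Keep digits and separators only; strip stray separators such as the one in "Rs."
    kept = "".join(ch for ch in text if ch.isdigit() or ch in ".,").strip(".,")
    return Decimal(kept.replace(thousands_sep, "").replace(decimal_sep, "."))


def to_usd(amount: Decimal, currency: str) -> Decimal:
    """Convert to USD using the placeholder table, rounded to cents."""
    return (amount * RATES_TO_USD[currency]).quantize(Decimal("0.01"))


def price_per_base_unit(price: Decimal, quantity: Decimal, unit: str) -> Decimal:
    """Price per gram or per millilitre, so different pack sizes become comparable."""
    return price / (quantity * UNIT_TO_BASE[unit])
```

For example, `to_usd(parse_price("1.299,50 €", ","), "EUR")` parses the German-formatted price and converts it with the placeholder EUR rate.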

4. Automate Data Collection

Automate scraping tasks to improve efficiency and scalability. Schedule regular runs with cron on Linux and macOS or Task Scheduler on Windows so your data stays up to date.
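With cron, a single crontab line such as `0 * * * * python3 scrape.py` runs a script at the top of every hour, and that is usually the simplest option. If you prefer to keep the schedule inside a long-running Python process, a loop like the following is one minimal way to do it. `run_periodically` and its parameters are hypothetical names for this sketch; the injectable `sleep` argument exists only to make the loop easy to test.

```python
import time
from typing import Callable, Optional


def run_periodically(job: Callable[[], None], interval_s: float,
                     max_runs: Optional[int] = None,
                     sleep: Callable[[float], None] = time.sleep) -> int:
    """Call `job` every `interval_s` seconds and return how many runs were attempted.

    An exception in one run is reported and the schedule continues, so a single
    failed scrape does not stop data collection. `max_runs=None` runs forever.
    """
    runs = 0
    while max_runs is None or runs < max_runs:
        started = time.monotonic()
        try:
            job()
        except Exception as exc:
            print(f"scrape run {runs + 1} failed: {exc!r}")
        runs += 1
        if max_runs is not None and runs >= max_runs:
            break
        # Sleep for the rest of the interval, accounting for how long the job took.
        sleep(max(0.0, interval_s - (time.monotonic() - started)))
    return runs
```

Unlike cron, this loop stops if the process exits, so it is best paired with a process supervisor.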

5. Ensure Compliance

Adhere to legal and ethical guidelines by:

- Respecting website terms of service.

- Avoiding sensitive or personal data.

- Following country-specific data privacy regulations, such as GDPR or CCPA.
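Terms of service and privacy law need human review, but one part of compliance is machine-readable: a site's robots.txt file. Python's standard library ships a parser for it, `urllib.robotparser.RobotFileParser`. The sketch below uses that parser; the helper names and the example URLs are hypothetical, and passing a robots.txt check does not by itself mean scraping a site is permitted.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def load_robots(robots_url: str) -> RobotFileParser:
    """Download and parse a site's robots.txt, e.g. https://shop.example/robots.txt."""
    parser = RobotFileParser(robots_url)
    parser.read()  # network call
    return parser


def filter_allowed(parser: RobotFileParser, user_agent: str, urls: List[str]) -> List[str]:
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]
```

`RobotFileParser.crawl_delay(user_agent)` also returns the site's requested delay between requests when one is declared, which is a useful input for throttling.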

Step-by-Step Guide to Scraping Quick Commerce Data

Step 1: Define Your Objectives

Clearly outline what data you need and why. For instance:

- Product pricing and availability.

- Delivery times and charges.

- Customer reviews and ratings.
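Writing the objectives down as a record type makes them concrete and keeps every country's scraper producing the same fields. The dataclass below is a minimal sketch covering the three examples above; the field names are illustrative assumptions, not a standard schema.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ProductSnapshot:
    """One observation of one product in one market (illustrative field names)."""
    platform: str
    country: str                            # ISO 3166-1 alpha-2, e.g. "IN", "GB"
    product_name: str
    price: float
    currency: str                           # ISO 4217, e.g. "INR", "GBP"
    in_stock: bool                          # availability
    delivery_minutes: Optional[int] = None  # promised delivery time
    delivery_fee: Optional[float] = None    # delivery charge, in `currency`
    rating: Optional[float] = None          # average customer rating
    review_count: Optional[int] = None
    # UTC timestamp so snapshots from different countries line up.
    scraped_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
```

`asdict(snapshot)` turns a record into a plain dictionary, ready for CSV or JSON export.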

Step 2: Choose the Right Tools

Select tools that match your requirements. For large-scale projects, consider using a quick commerce scraping API for efficient data retrieval.

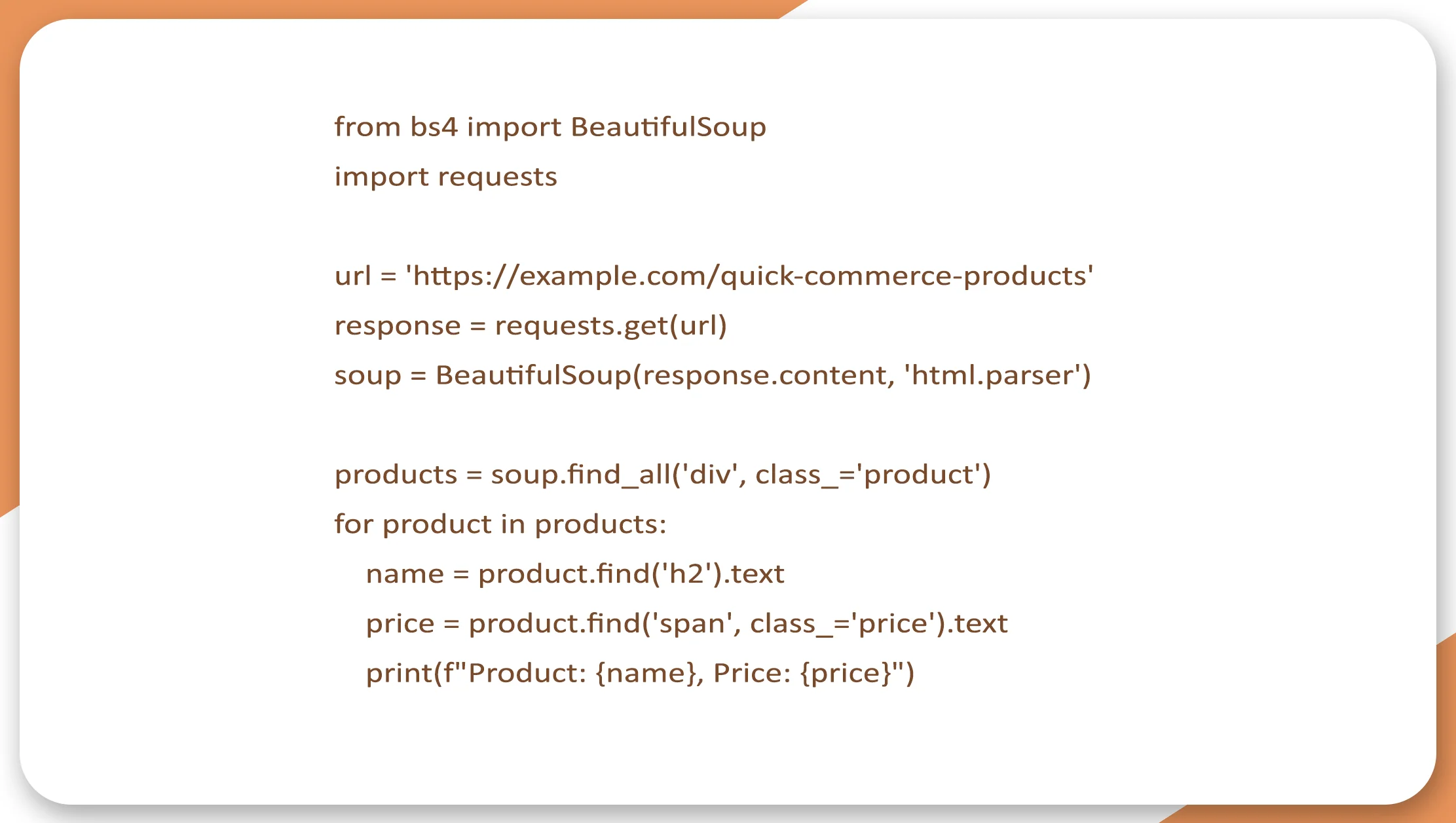

Step 3: Develop a Scraping Script

Create a script in Python, using a parser such as BeautifulSoup for static HTML pages or a browser automation tool such as Selenium for content rendered with JavaScript.
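Here is a minimal sketch of such a script for a static page. To stay dependency-free it uses Python's built-in `html.parser` rather than BeautifulSoup; with BeautifulSoup the same lookup would be a couple of `soup.select(...)` calls. The markup it expects (a `div.product` containing `span.name` and `span.price`) and the User-Agent string are hypothetical; inspect the real page to find its structure, and switch to Selenium when the listing is rendered by JavaScript.

```python
from typing import Dict, List, Optional
from urllib.request import Request, urlopen


from html.parser import HTMLParser


def fetch_html(url: str, user_agent: str = "Mozilla/5.0 (compatible; example-bot)") -> str:
    """Download a page; the User-Agent value is a placeholder."""
    req = Request(url, headers={"User-Agent": user_agent})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class ProductParser(HTMLParser):
    """Collects name/price pairs from hypothetical markup:
    <div class="product"><span class="name">...</span><span class="price">...</span></div>
    """

    def __init__(self) -> None:
        super().__init__()
        self.products: List[Dict[str, str]] = []
        self._field: Optional[str] = None  # field the next text node belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})  # start a new product record
        elif self.products and ("name" in classes or "price" in classes):
            self._field = "name" if "name" in classes else "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()


def parse_products(html: str) -> List[Dict[str, str]]:
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products
```

Usage: `parse_products(fetch_html(url))`, with a URL you are permitted to fetch. Prices come back as raw localized strings, to be normalized in a later step.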

Step 4: Use Proxies and User Agents

Rotate IP addresses and user agents to mimic organic browsing behavior, reducing the likelihood of being flagged.
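A minimal sketch of rotation using only the standard library: each request takes the next proxy and the next User-Agent in round-robin order. The proxy endpoints and User-Agent strings are hypothetical placeholders. Commercial providers often expose a single gateway address that rotates IPs on their side, in which case the proxy list shrinks to one entry.

```python
import itertools
from typing import List, Tuple
from urllib.request import ProxyHandler, Request, build_opener


class IdentityRotator:
    """Hands out (proxy, user_agent) pairs in round-robin order."""

    def __init__(self, proxies: List[str], user_agents: List[str]) -> None:
        self._proxies = itertools.cycle(proxies)
        self._agents = itertools.cycle(user_agents)

    def next_identity(self) -> Tuple[str, str]:
        return next(self._proxies), next(self._agents)

    def fetch(self, url: str, timeout: float = 10.0) -> bytes:
        """Fetch `url` through the next proxy with the next User-Agent header."""
        proxy, agent = self.next_identity()
        opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
        request = Request(url, headers={"User-Agent": agent})
        with opener.open(request, timeout=timeout) as response:
            return response.read()


# Hypothetical placeholders: replace with your provider's endpoints.
rotator = IdentityRotator(
    proxies=["http://proxy-a.example:8000", "http://proxy-b.example:8000"],
    user_agents=[
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0)",
        "Mozilla/5.0 (X11; Linux x86_64)",
    ],
)
```

Using lists of different lengths (two proxies, three agents) means the same proxy is not always paired with the same User-Agent.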

Step 5: Parse and Store Data

Store the extracted data in a structured format such as CSV or JSON for further analysis.
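Both formats can be written with the standard library. In this minimal sketch (the function name and file layout are assumptions), JSON keeps value types and non-ASCII product names intact, while CSV needs a fixed header, so the header is built from every key seen across the records.

```python
import csv
import json
from pathlib import Path
from typing import Dict, List, Tuple


def save_records(records: List[Dict], out_dir: str, stem: str) -> Tuple[Path, Path]:
    """Write the same records to <stem>.json and <stem>.csv inside out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    json_path = out / f"{stem}.json"
    csv_path = out / f"{stem}.csv"

    # JSON: keep types and non-ASCII characters (e.g. "Käse") as-is.
    json_path.write_text(json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8")

    # CSV: union of all keys, so sparse records still fit; missing values become "".
    fieldnames = sorted({key for rec in records for key in rec})
    with csv_path.open("w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)
    return json_path, csv_path
```

Note that CSV stores everything as text, so numeric and boolean columns need converting back when the file is read.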

Step 6: Analyze Data

Use analytical tools like Tableau or Power BI to gain insights from the scraped data.
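Tableau and Power BI can import the CSV or JSON files directly, but a small pre-aggregation step often makes cross-country dashboards simpler. The sketch below assumes records have already been normalized to USD and use the hypothetical keys `country`, `product`, and `price_usd`. It uses the median rather than the mean so that a single promotional price does not skew a market's figure.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def median_price_by_country(records: Iterable[dict]) -> Dict[Tuple[str, str], float]:
    """Median USD price for each (country, product) pair."""
    groups: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for rec in records:
        groups[(rec["country"], rec["product"])].append(rec["price_usd"])
    return {key: median(prices) for key, prices in groups.items()}


def to_rows(summary: Dict[Tuple[str, str], float]) -> List[dict]:
    """Flatten the summary into rows that a BI tool can import from CSV."""
    return [
        {"country": country, "product": product, "median_price_usd": round(price, 2)}
        for (country, product), price in sorted(summary.items())
    ]
```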

Leveraging Quick Commerce Data Scraping Tools and APIs

Quick commerce, characterized by ultra-fast delivery services, has revolutionized the retail and grocery sectors. To thrive in this competitive landscape, businesses can leverage data scraping tools and APIs to extract actionable insights from various platforms, enabling smarter decision-making and operational efficiency.

Real-Time Pricing and Product Monitoring

Quick commerce operates in a fast-paced environment where prices and product availability change frequently. Data scraping tools and APIs provide real-time access to this information, allowing businesses to monitor competitor pricing, stock levels, and discounts. This ensures that they remain competitive and responsive to market demands.
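In practice, monitoring usually means comparing successive snapshots of the same catalog. The sketch below shows one way to flag price moves above a threshold, items going out of stock, and listings that appear or disappear. The snapshot shape (`{product_id: {"price": ..., "in_stock": ...}}`) and the 5% default threshold are assumptions for illustration.

```python
from typing import Dict, List, NamedTuple, Optional


class Change(NamedTuple):
    product_id: str
    kind: str
    old_price: Optional[float]
    new_price: Optional[float]


def diff_snapshots(previous: Dict[str, dict], current: Dict[str, dict],
                   threshold_pct: float = 5.0) -> List[Change]:
    """Compare two {product_id: {"price": float, "in_stock": bool}} snapshots."""
    changes: List[Change] = []
    for pid, now in current.items():
        before = previous.get(pid)
        if before is None:
            changes.append(Change(pid, "new listing", None, now["price"]))
            continue
        if before["price"]:  # skip the percentage when the old price is 0
            pct = (now["price"] - before["price"]) / before["price"] * 100
            if abs(pct) >= threshold_pct:
                changes.append(Change(pid, f"price {pct:+.1f}%", before["price"], now["price"]))
        if before["in_stock"] and not now["in_stock"]:
            changes.append(Change(pid, "went out of stock", before["price"], now["price"]))
    # Products present before but missing now.
    for pid in sorted(previous.keys() - current.keys()):
        changes.append(Change(pid, "delisted", previous[pid]["price"], None))
    return changes
```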

Optimizing Inventory Management

By analyzing data on popular products, seasonal trends, and customer preferences, businesses can optimize their inventory. Data collected with quick commerce scraping tools helps identify high-demand items and feeds demand forecasts, reducing the risk of overstocking or understocking.

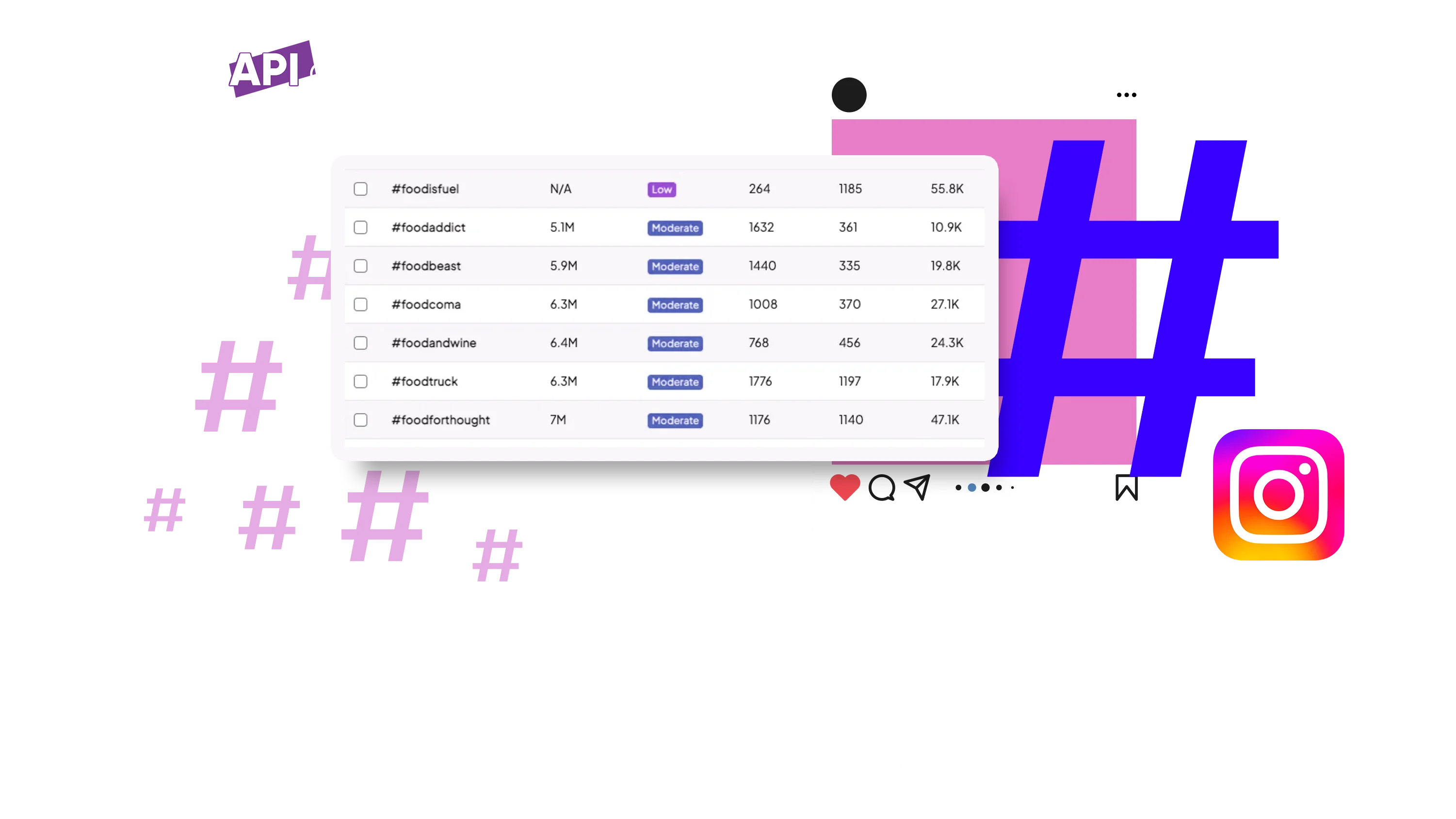

Enhancing Customer Insights

Extracting customer reviews, ratings, and feedback from quick commerce platforms offers valuable insights into consumer preferences and pain points. This data can be used to improve product offerings and create targeted marketing strategies that resonate with customers.

Improving Delivery and Logistics

Quick commerce relies heavily on efficient delivery. Scraping data related to delivery times, service areas, and logistics performance helps businesses streamline operations, identify bottlenecks, and enhance service quality.

Personalized Marketing Strategies

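Public platform data, such as reviews, ratings, and which products are promoted or bestselling, shows what customers respond to. Combined with a business's own first-party data on browsing patterns and purchase histories, this information can be used to design personalized promotions, boosting customer engagement and retention.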

Quick commerce data scraping tools and APIs empower businesses to make data-driven decisions, adapt to market dynamics, and deliver exceptional value to customers. Leveraging these technologies ensures a competitive edge in the rapidly evolving quick commerce sector.

Scaling Quick Commerce Data Scraping Across Multiple Countries

To scale your operations globally:

1. Set Up Regional Servers:

Deploy servers in target countries to reduce latency and to see the same location-specific catalogs, prices, and delivery options that local customers see.

2. Use Cloud-Based Scraping Services:

Serverless platforms such as AWS Lambda or Google Cloud Functions can run many scraping jobs in parallel and scale with demand, without you having to manage servers.

3. Optimize for Local Compliance:

Work with legal advisors to ensure your scraping activities align with local regulations.
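Scaling out is easier when each country is described by a configuration record and the same scraper runs once per market. The sketch below shows that pattern with a local thread pool; in a serverless deployment, each market would instead become a separate function invocation. `MarketConfig`, its fields, and the `scrape_one` callback are hypothetical names for illustration.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class MarketConfig:
    country: str        # ISO 3166-1 alpha-2
    base_url: str       # hypothetical storefront URL for that market
    currency: str       # ISO 4217
    decimal_sep: str    # "." or "," when parsing prices
    proxy_region: str   # where requests should originate


def scrape_all(markets: List[MarketConfig],
               scrape_one: Callable[[MarketConfig], list],
               max_workers: int = 4) -> Dict[str, list]:
    """Run one scrape job per market concurrently; one failing market doesn't sink the rest."""
    results: Dict[str, list] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scrape_one, m): m for m in markets}
        for fut in as_completed(futures):
            market = futures[fut]
            try:
                results[market.country] = fut.result()
            except Exception as exc:
                print(f"{market.country}: failed with {exc!r}")
                results[market.country] = []
    return results
```

Threads suit this workload because scraping mostly waits on the network, not the CPU.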

Ethical Considerations in Quick Commerce Data Scraping

While scraping offers significant benefits, it’s essential to maintain ethical standards. Always:

- Attribute data to its source.

- Avoid scraping sensitive information.

- Respect website owners’ requests and terms of service.
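One concrete way to respect site owners is to limit how often you contact each host, for example, using the Crawl-delay a site declares in robots.txt. The class below is a minimal per-host throttle sketch; its name and parameters are assumptions, and the injectable clock and sleep functions exist only to make it testable.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlsplit


class HostThrottle:
    """Enforce a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_interval_s: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval_s = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._last: Dict[str, float] = {}  # host -> time of its last request

    def wait(self, url: str) -> float:
        """Block until `url`'s host may be contacted again; return seconds waited."""
        host = urlsplit(url).netloc
        now = self._clock()
        last = self._last.get(host)
        delay = 0.0 if last is None else max(0.0, last + self.min_interval_s - now)
        if delay:
            self._sleep(delay)
        self._last[host] = now + delay
        return delay
```

Call `throttle.wait(url)` immediately before each request; different hosts do not slow each other down.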

Conclusion

Scraping quick commerce data across multiple countries is a powerful strategy for businesses seeking a competitive edge in today’s fast-paced market. By leveraging the right tools, APIs, and techniques, you can overcome challenges and extract actionable insights efficiently. Remember to follow ethical practices and legal guidelines to ensure a seamless and responsible data scraping process. Whether you’re a seasoned developer or a newcomer to web scraping, the strategies outlined in this blog will help you unlock the full potential of quick commerce data. For more details, contact Real Data API now!
