Introduction

In the age of data-driven decision-making, e-commerce data plays a crucial role in market analysis, competitor research, and pricing strategies. Scraping BigBasket with Python lets businesses and individuals extract product details, pricing, and availability from BigBasket's website. This guide walks through how to scrape data from BigBasket with Python, covering the necessary tools, techniques, and best practices.

What is Web Scraping?

Web scraping is the automated extraction of data from websites. It allows users to collect large amounts of information quickly and efficiently. Applied to BigBasket, it lets businesses and developers retrieve current product details, prices, stock availability, and other essential data from BigBasket’s website.

Python is a popular choice for web scraping, thanks to its powerful libraries like BeautifulSoup, Scrapy, and Selenium. These tools allow users to scrape data from BigBasket with Python and save it in structured formats such as CSV, JSON, or databases for easy analysis and insights.
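As a rough illustration of those storage formats, the sketch below writes the same records to CSV text, JSON text, and an in-memory SQLite table using only the standard library. The sample records, the `products` table name, and the helper names are assumptions made for this example; they are not BigBasket data.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample records standing in for scraped products.
records = [
    {"name": "Banana", "price": 45.0},
    {"name": "Tomato", "price": 30.5},
]


def to_csv_text(rows):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json_text(rows):
    """Serialize a list of dicts to indented JSON text."""
    return json.dumps(rows, indent=2)


def to_sqlite(rows, connection):
    """Insert the rows into a 'products' table (hypothetical schema)."""
    connection.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    connection.executemany(
        "INSERT INTO products (name, price) VALUES (:name, :price)", rows
    )
    connection.commit()


csv_text = to_csv_text(records)
json_text = to_json_text(records)

conn = sqlite3.connect(":memory:")  # In-memory database, nothing is written to disk
to_sqlite(records, conn)
row_count = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
```

CSV suits spreadsheets, JSON suits nested data and APIs, and a database suits repeated runs where rows accumulate over time.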

By implementing BigBasket data scraping, businesses can gain valuable insights into market trends, competitive pricing, and inventory management. However, it is essential to comply with BigBasket’s terms of service and respect ethical scraping practices.

If you are looking to extract data from BigBasket with Python, you can leverage web scraping techniques to automate data collection and improve decision-making in e-commerce and retail sectors.

Why Scrape BigBasket Data?

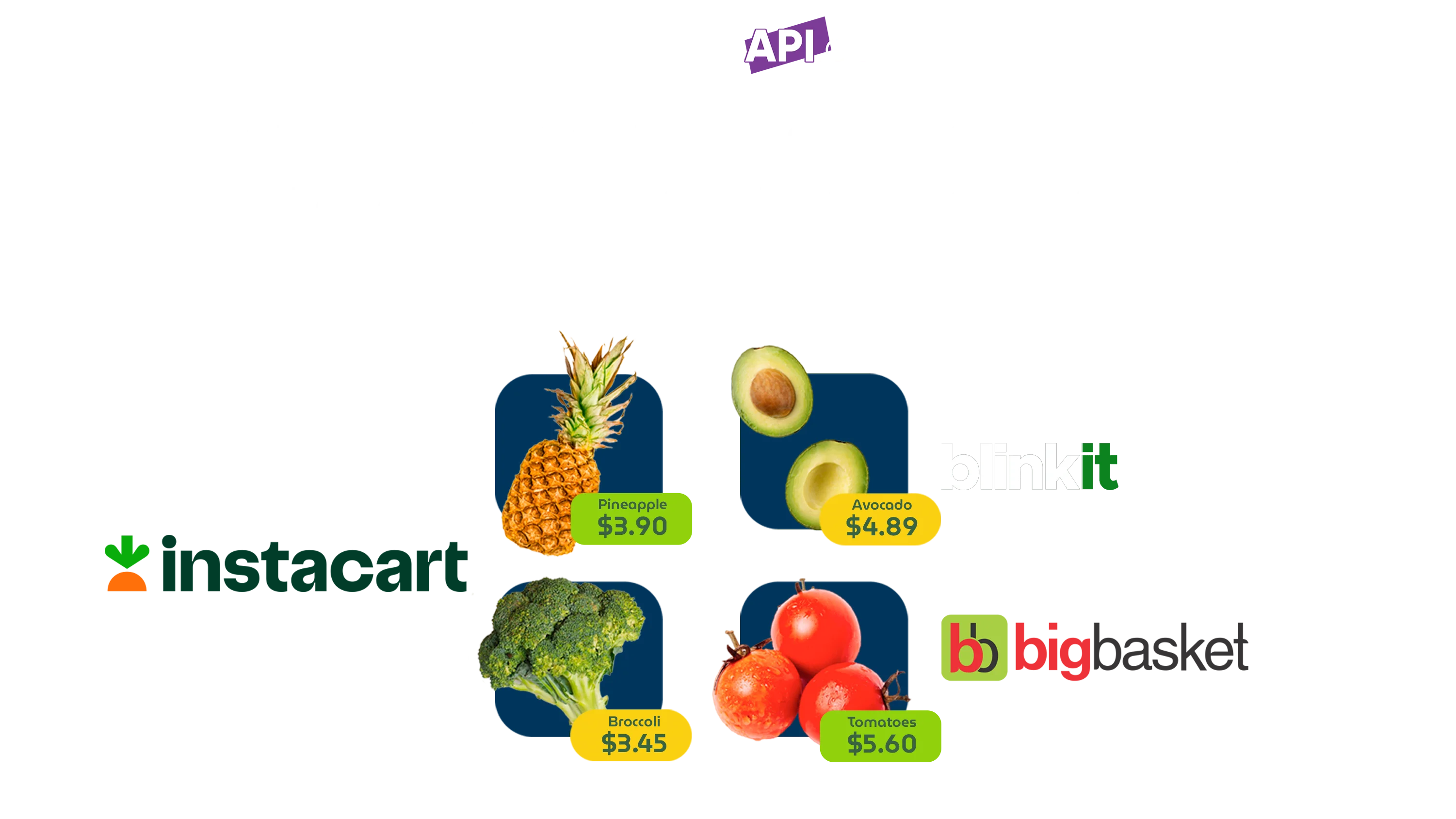

BigBasket data scraping is useful for businesses, researchers, and developers who need up-to-date grocery and e-commerce data. With a BigBasket scraper, users can extract data that informs decisions and helps optimize operations. Here are some key reasons to extract data from BigBasket with Python:

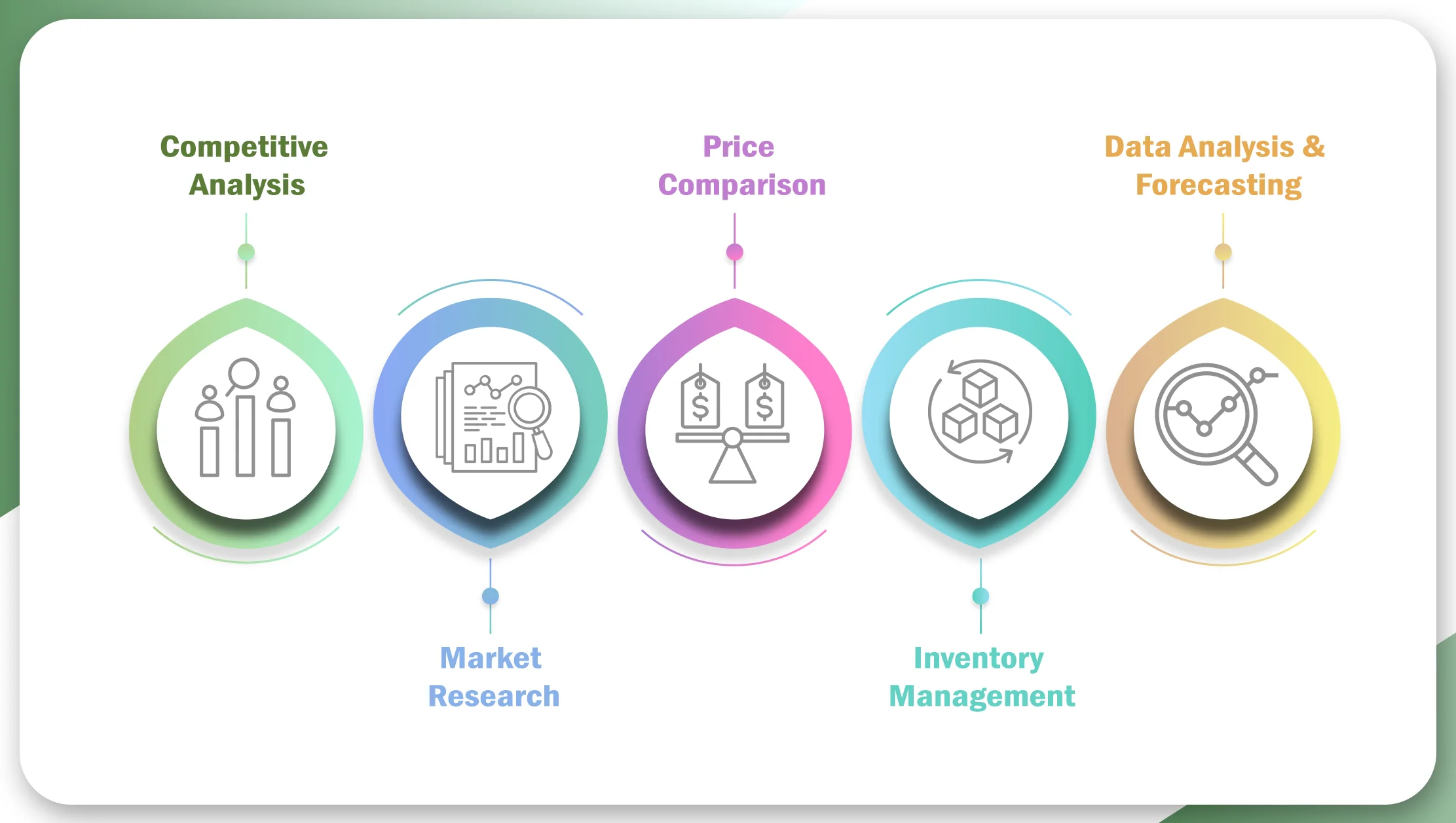

1. Competitive Analysis

To stay ahead in the market, businesses need to track competitor pricing, product availability, and discounts. BigBasket data scraping allows retailers and analysts to gather this information and adjust their strategies accordingly.

2. Market Research

By collecting data on trending products, seasonal demands, and customer preferences, companies can gain insights into grocery and e-commerce industry trends. This helps in launching new products or improving existing ones.

3. Price Comparison

Many businesses and consumers compare prices across different e-commerce platforms before making a purchase. With a BigBasket Scraper, you can automate price tracking and ensure competitive pricing strategies.

4. Inventory Management

Retailers and suppliers need to track product availability and stock levels. Extracting data from BigBasket with Python helps businesses monitor stock changes and prevent supply chain disruptions.

5. Data Analysis & Forecasting

By analyzing historical data, businesses can predict future trends, customer demands, and pricing patterns. BigBasket data scraping enables data-driven decision-making for better sales forecasting and inventory planning.

Using a BigBasket Scraper, businesses can automate data collection, optimize their operations, and gain a competitive edge in the e-commerce industry. However, it’s important to follow ethical web scraping practices and comply with BigBasket’s terms of service.

Tools Required for BigBasket Scraping

Before diving into BigBasket Data Scraping Using Python, install the following libraries:

    pip install requests beautifulsoup4 pandas

- Requests – Fetches the webpage content.

- BeautifulSoup – Parses HTML data.

- Pandas – Stores and processes extracted data.
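Before pointing these tools at a live page, it can help to see the parsing step on a small, hand-written HTML string. The sketch below is only an illustration: the markup and the class names (`product-card`, `product-name`, `product-price`) are hypothetical and do not reflect BigBasket's actual page structure.

```python
from bs4 import BeautifulSoup

# Hand-written HTML standing in for a product listing page.
# The class names here are hypothetical, chosen only for this example.
SAMPLE_HTML = """
<div class="product-card">
  <div class="product-name"> Banana - Robusta </div>
  <span class="product-price">Rs 45</span>
</div>
<div class="product-card">
  <div class="product-name">Tomato - Local</div>
</div>
"""


def parse_products(html):
    """Return one dict per product card, tolerating a missing price."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.find_all("div", class_="product-card"):
        name_tag = card.find("div", class_="product-name")
        price_tag = card.find("span", class_="product-price")
        if name_tag is None:
            continue  # A card without a name is not useful, so skip it
        rows.append({
            "Product Name": name_tag.get_text(strip=True),
            "Price": price_tag.get_text(strip=True) if price_tag else None,
        })
    return rows


products = parse_products(SAMPLE_HTML)
```

The resulting list of dictionaries can be passed directly to `pd.DataFrame(products)`. Checking each tag for `None` keeps one malformed card from stopping the whole run.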

Steps to Scrape Data from BigBasket with Python

1. Import Required Libraries

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd

2. Define the BigBasket URL

Choose a product category page or a specific product URL from BigBasket.

    url = "https://www.bigbasket.com/pc/fruits-vegetables/"  # Example URL

3. Send a Request to the Website

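    # Send a browser-like User-Agent, since some sites reject the default one
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }

    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # Stop early on 4xx/5xx responses

4. Parse the HTML Content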

    soup = BeautifulSoup(response.content, "html.parser")

5. Extract Product Information

    products = []
    for item in soup.find_all("div", class_="col-xs-12 col-sm-12 col-md-6 col-lg-4"):
        name = item.find("div", class_="product-name")
        price = item.find("span", class_="discnt-price")
        if name is None or price is None:
            continue  # Skip cards missing a name or price instead of raising AttributeError
        products.append({"Product Name": name.text.strip(), "Price": price.text.strip()})

6. Store Data in a DataFrame

    df = pd.DataFrame(products)
    print(df.head())

7. Save Data to CSV

    df.to_csv("bigbasket_data.csv", index=False)

Handling Anti-Scraping Measures

BigBasket, like many e-commerce platforms, implements security measures to prevent automated scraping. Here are some techniques to bypass these measures:

- Use Rotating Proxies – Services like ScraperAPI or BrightData help bypass IP bans.

- Implement Delays & Randomization – Avoid sending requests too frequently.

- Use Selenium for Dynamic Content – Some data may load via JavaScript, requiring Selenium for scraping.
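The delay-and-randomization idea from the list above can be sketched as a small helper that pauses for a random interval between requests. The function name `fetch_politely`, the 2 to 5 second range, and the stand-in `fetch` callable are all assumptions for illustration; suitable delays depend on the site and its terms.

```python
import random
import time


def fetch_politely(urls, fetch, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between calls.

    `fetch` and `sleep` are injected so the pacing logic can be tried
    without touching the network or actually waiting.
    """
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            # Random pause so requests do not arrive at a fixed rhythm
            sleep(random.uniform(min_delay, max_delay))
        results[url] = fetch(url)
    return results


# Demonstration with a stand-in fetch function and a recorded (not real) sleep.
demo_urls = [
    "https://example.com/a",
    "https://example.com/b",
    "https://example.com/c",
]
pauses = []
demo = fetch_politely(
    demo_urls,
    fetch=lambda url: f"<html>{url}</html>",
    sleep=pauses.append,
)
```

In real use, `fetch` could be something like `lambda u: requests.get(u, headers=headers, timeout=10).text`, with the default `time.sleep` left in place.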

Using Selenium for Dynamic Content

    # Requires: pip install selenium webdriver-manager
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.chrome.service import Service
    from webdriver_manager.chrome import ChromeDriverManager

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")  # Run in headless mode

    browser = webdriver.Chrome(service=Service(ChromeDriverManager().install()), options=options)
    try:
        browser.get(url)
        # Parse the rendered page, including JavaScript-generated content
        soup = BeautifulSoup(browser.page_source, "html.parser")
    finally:
        browser.quit()  # Always release the browser process

Ethical Considerations and Legal Aspects

Before running a BigBasket scraper, ensure compliance with BigBasket’s terms of service. Always:

- Check the website’s robots.txt file.

- Avoid overloading the server with frequent requests.

- Use data responsibly and ethically.
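The robots.txt check can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a hypothetical set of rules inline so it runs without network access; the rules, the `my-scraper` user agent, and the example URLs are assumptions and do not describe BigBasket's actual robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, NOT BigBasket's actual file.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
""".strip().splitlines()


def build_parser(lines):
    """Build a RobotFileParser from robots.txt lines already in memory."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser


parser = build_parser(SAMPLE_ROBOTS)

# Ask whether a given user agent may fetch a given URL under these rules.
allowed_listing = parser.can_fetch(
    "my-scraper", "https://www.example.com/pc/fruits-vegetables/"
)
allowed_checkout = parser.can_fetch(
    "my-scraper", "https://www.example.com/checkout/cart"
)

# Crawl-delay, if the file declares one for this agent (None otherwise).
delay = parser.crawl_delay("my-scraper")
```

For a live site, the same parser can load the real file with `parser.set_url("https://www.bigbasket.com/robots.txt")` followed by `parser.read()`, after which `can_fetch` is called in the same way before each request.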

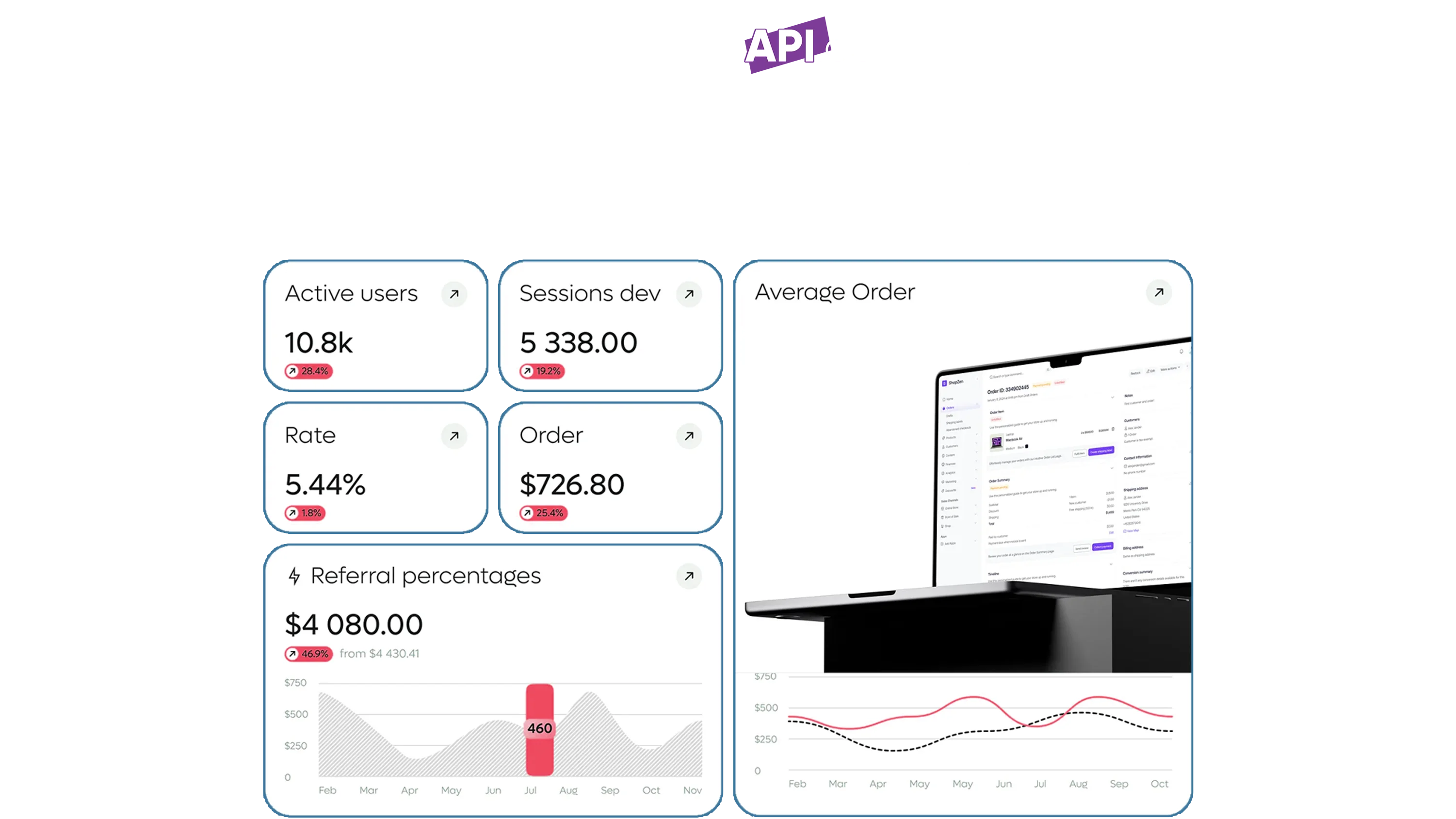

Why Choose Real Data API?

If you need a hassle-free solution for BigBasket data scraping, Real Data API provides a reliable and scalable approach. Here’s why you should consider it:

- No IP Bans – The API handles proxy rotation automatically.

- Real-Time Data – Get up-to-date product details without delays.

- Easy Integration – Simple REST API that integrates with Python and other platforms.

- Scalability – Extract data from thousands of products effortlessly.

By using Real Data API, you can bypass scraping challenges and focus on data analysis instead.

Conclusion

Scraping BigBasket data with Python is a powerful way to collect valuable insights from BigBasket’s platform. By following the steps outlined in this guide, you can scrape data from BigBasket with Python efficiently and use it for various business applications.

If you're looking for an automated solution or need assistance with web scraping, check out our Real Data API for hassle-free data extraction.