Introduction

In today's data-driven world, web crawling has become an indispensable technique for gathering information from the vast expanse of the internet. Whether it's for market research, price comparison, or data analysis, businesses and researchers rely on web crawling tools and frameworks to extract valuable insights from websites efficiently. In this comprehensive guide, we'll delve into the top open-source web crawling tools and frameworks available in 2024, examining their features, advantages, limitations, and use cases.

Why Use Web Crawling Frameworks and Tools?

Web crawling frameworks and tools automate the extraction of information from large numbers of web pages, so businesses and researchers can put that data to work in a range of applications. Here are some key reasons to use them in 2024:

Efficient Data Extraction:

Web crawling tools automate the process of data extraction from websites, making it quicker and more efficient than manual methods. These tools can handle large volumes of data and extract information with high precision, which is essential for tasks like market research and competitive analysis.

Real-Time Data Access:

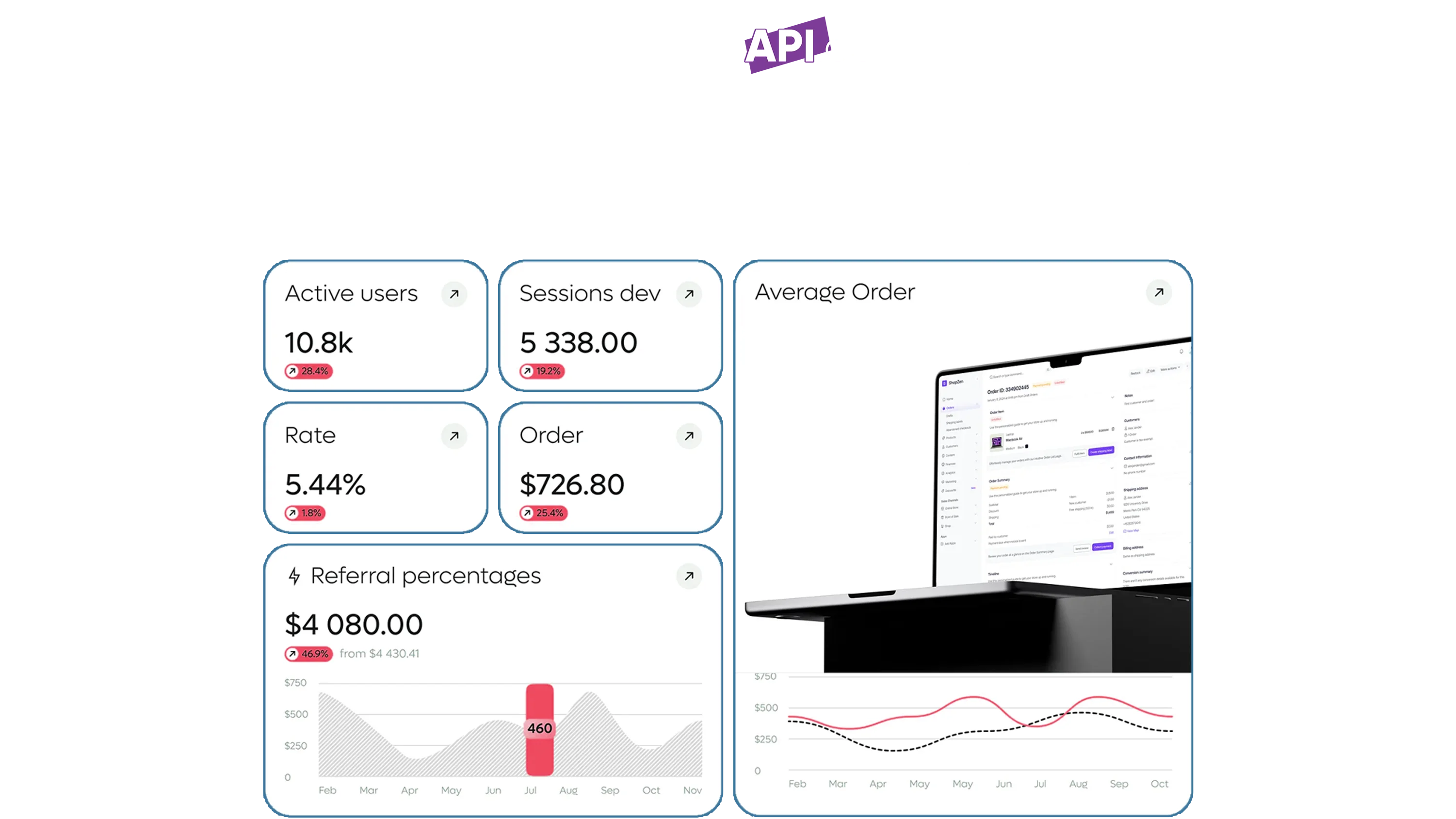

Web crawling solutions can be scheduled to run frequently, giving businesses access to near-real-time information. This is crucial for price comparison websites, which need up-to-date pricing from multiple e-commerce platforms to offer accurate comparisons to consumers.

Scalability:

Some modern web crawling frameworks are designed for large-scale crawling. Apache Nutch, for example, supports distributed crawling on Apache Hadoop, which spreads the processing of massive datasets across multiple servers. Scrapy does not include a built-in facility for multi-server crawls, but it can be scaled out by running several instances or by using extensions.
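
To make the idea of distributed crawling more concrete, here is a minimal sketch of one common way to divide work: hashing each URL's host name so that every page of a given site lands on the same worker. It uses only the Python standard library. The function names `shard_for` and `partition` and the worker count are hypothetical, and this is not how any particular framework implements partitioning.

```python
import hashlib
from collections import defaultdict
from urllib.parse import urlsplit


def shard_for(url: str, num_workers: int) -> int:
    """Map a URL to a worker index using a stable hash of its host name."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap to a worker.
    return int.from_bytes(digest[:8], "big") % num_workers


def partition(urls, num_workers):
    """Group URLs into per-worker lists; one host always maps to one worker."""
    shards = defaultdict(list)
    for url in urls:
        shards[shard_for(url, num_workers)].append(url)
    return dict(shards)


if __name__ == "__main__":
    # Placeholder URLs, used only for illustration.
    seeds = [
        "https://example.com/a",
        "https://example.com/b",
        "https://example.org/",
    ]
    for worker, batch in sorted(partition(seeds, 3).items()):
        print(worker, batch)
```

Keeping a host on a single worker makes it easier to apply per-site rate limits, at the cost of uneven load when one site is much larger than the others.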

Customization and Flexibility:

Web crawling tools in 2024 offer extensive customization options. Developers can tailor these tools to specific needs, such as targeting particular data points on a webpage or implementing custom parsing rules. This flexibility is vital for niche applications like sentiment analysis or content aggregation.
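
As an illustration of a custom parsing rule that targets one data point, the sketch below collects the text of every element carrying a given CSS class, using Python's built-in `html.parser`. The class name `ClassTextExtractor`, the helper `extract_by_class`, and the sample markup are assumptions made for this example. Real frameworks usually offer CSS or XPath selectors for the same job.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element that carries a given CSS class."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.matches = []
        self._depth = 0      # greater than 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            self._depth += 1             # nested element inside a match
        elif self.target_class in classes:
            self._depth = 1              # entering a matching element
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:             # leaving the matching element
            self.matches.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_by_class(html: str, target_class: str):
    """Return the text content of all elements with the given class."""
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.matches


if __name__ == "__main__":
    sample = '<div><span class="price sale">19.99 <b>USD</b></span></div>'
    print(extract_by_class(sample, "price"))
```

The sketch assumes reasonably well-formed HTML. Pages with unclosed tags would need a more forgiving parser.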

Cost-Effectiveness:

Automating data extraction with web crawling tools reduces the need for manual data collection, saving time and resources. This cost-effectiveness is particularly beneficial for small businesses and startups that require comprehensive data for market research without significant investment.

Enhanced Data Accuracy:

Automated scraping improves data accuracy by reducing the human errors that creep into manual collection. A crawler applies the same extraction rules on every run and can output the results in structured formats, making the data easier to analyze and integrate into business intelligence systems.

Competitive Advantage:

Utilizing advanced web crawling frameworks allows businesses to stay ahead of competitors by providing timely and relevant insights. For example, e-commerce companies can use data crawling services to monitor competitors' pricing and adjust their strategies accordingly.

Best Web Crawling Frameworks and Tools of 2024

In 2024, the best web crawling frameworks and tools include Apache Nutch, Heritrix, StormCrawler, Apify SDK, NodeCrawler, Scrapy, Node SimpleCrawler, and HTTrack. Apache Nutch offers scalability and integration with Hadoop, while Heritrix excels in web archiving. StormCrawler provides real-time data extraction capabilities, and Apify SDK is ideal for JavaScript-based scraping. NodeCrawler and Node SimpleCrawler are lightweight and perfect for small to medium projects. Scrapy, a Python-based framework, is known for its powerful scraping and data processing capabilities, and HTTrack allows users to download entire websites for offline access. These tools cater to various web crawling needs efficiently. Let’s go through all these tools in detail:

Apache Nutch

Apache Nutch is a powerful, scalable web crawling framework written in Java, ideal for building custom search engines and large-scale web scraping projects. It features a flexible plugin architecture, allowing for extensive customization and extension. Apache Nutch integrates with Apache Hadoop, enabling distributed processing for handling massive datasets. Additionally, it supports integration with search engines like Apache Solr and Elasticsearch for robust indexing and search capabilities. While it offers advanced features for experienced developers, Nutch has a steeper learning curve than lighter-weight tools and requires Java expertise for effective customization and optimization. It is best suited to complex, high-volume web crawling and indexing tasks.

Pros:

- Scalable and extensible web crawling framework written in Java.

- Provides a flexible plugin architecture for customizing and extending functionality.

- Supports distributed crawling and indexing using Apache Hadoop.

- Integrates with popular search engines like Apache Solr and Elasticsearch for indexing and search capabilities.

- Actively maintained by the Apache Software Foundation.

Cons:

- Steeper learning curve compared to some other web crawling tools.

- Requires Java development expertise for customization and troubleshooting.

Use Cases:

- Building custom search engines and web archives.

- Harvesting data for academic research and digital libraries.

- Monitoring online forums and social media platforms for sentiment analysis.

Heritrix

Heritrix is a high-performance web crawling framework developed by the Internet Archive, specifically designed for web archiving and content preservation. Written in Java, it provides comprehensive configuration options for precise control over crawling behavior and robust handling of various content types, including HTML, images, and documents. Heritrix excels in archiving historical snapshots of websites, making it invaluable for academic research and historical preservation. While it offers extensive capabilities for large-scale crawling, Heritrix requires significant resources and expertise in Java for effective use. It is ideal for organizations focused on preserving web content for long-term access and analysis.

Pros:

- Scalable web crawling framework developed by the Internet Archive.

- Specifically designed for archival and preservation of web content.

- Supports comprehensive configuration options for fine-tuning crawling behavior.

- Provides robust handling of various content types, including HTML, images, and documents.

- Offers extensive documentation and community support.

Cons:

- Primarily focused on web archiving use cases, so it may not be suitable for general-purpose web crawling.

- Requires significant resources and infrastructure for large-scale crawling operations.

Use Cases:

- Archiving and preserving historical snapshots of websites and online content.

- Researching changes in web content over time for academic or historical purposes.

- Creating curated collections of web resources for educational or reference purposes.

StormCrawler

StormCrawler is an open-source, scalable web crawling framework built on Apache Storm, designed for real-time, large-scale web crawling and processing. It offers fault-tolerant, distributed crawling capabilities, making it ideal for handling massive datasets and continuous data streams. StormCrawler supports integration with Apache Kafka for efficient message queuing and event-driven architecture. Its modular design allows developers to customize and extend functionalities easily. Although it requires familiarity with Apache Storm and distributed computing concepts, StormCrawler excels in scenarios needing real-time data extraction, such as news monitoring, content aggregation, and competitive intelligence, providing a powerful solution for dynamic web crawling needs.

Pros:

- Scalable web crawling framework built on top of Apache Storm.

- Provides fault-tolerant and distributed processing capabilities for large-scale crawling tasks.

- Supports integration with Apache Kafka for message queuing and event-driven architecture.

- Offers a modular architecture with reusable components for crawling, parsing, and processing.

- Actively maintained and updated by a community of contributors.

Cons:

- Requires familiarity with Apache Storm and distributed computing concepts.

- Configuration and setup may be more complex compared to standalone web crawling tools.

Use Cases:

- Real-time monitoring of news websites and social media feeds for breaking updates.

- Aggregating data from multiple sources for content curation and analysis.

- Building custom search engines and recommendation systems based on real-time web data.

Apify SDK

Apify SDK is a versatile web scraping and automation toolkit designed for JavaScript and TypeScript developers. It simplifies the creation of scalable web scrapers and automation tasks using headless browsers driven by Puppeteer or Playwright. The SDK offers powerful features, including parallel crawling, request queue management, and robust error handling, making it suitable for complex scraping projects. Note that since version 3, the crawling components (crawlers and the request queue) have been split out into the separate open-source Crawlee library, while the Apify SDK itself focuses on integration with the Apify platform. With built-in support for cloud-based execution on that platform, it allows for straightforward deployment and management of scraping tasks. Apify SDK is well suited to e-commerce monitoring, lead generation, and data aggregation, particularly for developers who want a comprehensive and user-friendly scraping solution.

Pros:

- Comprehensive web scraping and automation platform with a user-friendly SDK.

- Allows users to write web scraping scripts using JavaScript or TypeScript.

- Offers built-in support for headless browser automation using Puppeteer or Playwright.

- Provides a marketplace for reusable scraping actors covering various websites and use cases.

- Supports cloud-based scheduling, monitoring, and execution of scraping tasks.

Cons:

- Limited to the features and capabilities provided by the Apify platform.

- May incur costs for usage beyond the free tier or for accessing premium features.

Use Cases:

- E-commerce price monitoring and product data extraction.

- Lead generation and contact information scraping from business directories and social networks.

- Content aggregation and scraping of articles, images, and videos from news websites and blogs.
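
Request queue management and error handling, two of the features described above, come down to a few simple rules: skip URLs that were already queued, retry requests that fail, and give up after a fixed number of attempts. The sketch below shows those rules in plain Python. It is not Apify SDK code. The class name `SimpleRequestQueue`, the `max_retries` parameter, and the `handler` callback are hypothetical names chosen for this illustration.

```python
from collections import deque


class SimpleRequestQueue:
    """FIFO queue that skips already-seen URLs and retries failed requests."""

    def __init__(self, max_retries: int = 2):
        self.max_retries = max_retries
        self._pending = deque()   # items are (url, attempts_so_far)
        self._seen = set()
        self.failed = []          # URLs that failed on every attempt

    def add(self, url: str) -> bool:
        """Queue a URL once; return False if it was already queued."""
        if url in self._seen:
            return False
        self._seen.add(url)
        self._pending.append((url, 0))
        return True

    def run(self, handler):
        """Call handler(url) for each request; handler may return new URLs."""
        handled = []
        while self._pending:
            url, attempts = self._pending.popleft()
            try:
                new_urls = handler(url) or []
            except Exception:
                if attempts < self.max_retries:
                    # Put the request back at the end of the queue.
                    self._pending.append((url, attempts + 1))
                else:
                    self.failed.append(url)
                continue
            handled.append(url)
            for new_url in new_urls:
                self.add(new_url)
        return handled
```

A real crawler would also persist the queue and add a delay between retries. Both are left out here to keep the sketch short.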

NodeCrawler

NodeCrawler is a lightweight and efficient web crawling library for Node.js, designed to simplify the process of extracting data from websites. It provides an easy-to-use API for defining and executing web scraping tasks, supporting concurrent and asynchronous crawling. NodeCrawler is ideal for small to medium-sized projects, offering basic features for handling URLs, request headers, and response parsing. It lacks advanced capabilities like distributed crawling, but its simplicity and integration with the Node.js ecosystem make it a good fit for prototyping, automating repetitive tasks, and scraping data from relatively straightforward websites.

Pros:

- Lightweight and easy-to-use web crawling library for Node.js applications.

- Provides a simple API for defining and executing web crawling tasks.

- Supports concurrent and asynchronous crawling of multiple URLs.

- Integrates seamlessly with Node.js ecosystem and third-party modules.

- Well-suited for small-scale crawling tasks and prototyping.

Cons:

- Limited scalability and performance compared to more robust frameworks.

- May not offer advanced features like distributed crawling or content extraction.

Use Cases:

- Extracting data from small to medium-sized websites for research or analysis.

- Automating repetitive tasks like link checking or content scraping in web applications.

- Building custom web scraping solutions within Node.js projects.

Scrapy

Scrapy is a robust and versatile web crawling framework for Python, designed to simplify web scraping tasks. It provides a high-level API for defining spiders, handling requests, and parsing responses, enabling efficient and scalable data extraction. Scrapy supports asynchronous and concurrent crawling, making it suitable for large-scale projects. It can export scraped data in formats such as JSON and CSV, which load easily into data analysis and visualization libraries like Pandas and Matplotlib. While it has a steeper learning curve than simpler scraping libraries and requires Python proficiency, Scrapy's extensive features and active community support make it a top choice for developers seeking a powerful and flexible web scraping framework.

Pros:

- Powerful and extensible web scraping framework for Python developers.

- Provides a high-level API for defining web scraping spiders and pipelines.

- Supports asynchronous and concurrent crawling of multiple websites.

- Offers built-in support for handling requests, responses, and session management.

- Exports scraped data in formats such as JSON and CSV that load easily into data analysis and visualization libraries like Pandas and Matplotlib.

Cons:

- Requires knowledge of the Python programming language for customization and extension.

- Steeper learning curve for beginners compared to simpler scraping libraries.

Use Cases:

- Market research and competitor analysis using data extracted from e-commerce websites.

- Price comparison and monitoring of product prices across multiple online retailers.

- Scraping news articles, blog posts, and social media content for content aggregation and analysis.
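
The asynchronous, concurrent crawling mentioned above can be illustrated with the Python standard library alone. The sketch below is not Scrapy code and does not use Scrapy's API. It only shows the general pattern of keeping several requests in flight while capping how many run at once. The names `crawl_concurrently`, `fake_fetch`, and `max_concurrency` are hypothetical, and `fake_fetch` is a stand-in that performs no network access.

```python
import asyncio


async def crawl_concurrently(urls, fetch, max_concurrency=5):
    """Run fetch(url) for all URLs with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        # The semaphore blocks here once max_concurrency fetches are active.
        async with semaphore:
            return url, await fetch(url)

    # gather() returns results in the same order as the input URLs.
    results = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return dict(results)


async def fake_fetch(url):
    """Stand-in for a real HTTP request: waits briefly, returns a fake body."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


if __name__ == "__main__":
    # Placeholder URLs, used only for illustration.
    demo_urls = [f"https://example.com/page{i}" for i in range(6)]
    pages = asyncio.run(crawl_concurrently(demo_urls, fake_fetch, max_concurrency=2))
    print(len(pages))
```

In a real crawler, `fake_fetch` would be replaced by an asynchronous HTTP client call, and the concurrency cap would typically be set per site to avoid overloading servers.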

Node SimpleCrawler

Node SimpleCrawler is an easy-to-use web crawling library for Node.js, designed for simplicity and efficiency. It offers a straightforward API for defining and managing crawling tasks, supporting concurrent requests to enhance performance. Ideal for small to medium-sized web scraping projects, SimpleCrawler provides essential features like URL management, request customization, and basic content extraction. While it lacks advanced functionalities such as distributed crawling and complex data parsing, its lightweight nature and ease of integration with the Node.js ecosystem make it perfect for developers looking to quickly prototype or automate simple web scraping tasks without a steep learning curve.

Pros:

- Lightweight web crawling library for Node.js applications.

- Offers a simple and intuitive API for defining crawling tasks.

- Supports parallel execution of multiple crawling tasks for improved performance.

- Provides basic features for handling URLs, request headers, and response parsing.

- Suitable for small-scale scraping tasks and prototyping.

Cons:

- Limited functionality and extensibility compared to more feature-rich frameworks.

- May not offer advanced features like distributed crawling or content extraction.

Use Cases:

- Scraping data from personal blogs or portfolio websites for research or analysis.

- Monitoring changes in web content or page structure for quality assurance or SEO purposes.

- Extracting metadata or links from web pages for data indexing or cataloging.
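
The last use case, extracting metadata and links from a page, can be sketched in a few lines. The example below uses Python's standard library rather than Node SimpleCrawler itself, so it shows the technique and not that library's API. The class `LinkAndMetaParser`, the helper `extract_links_and_meta`, and the sample URLs are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkAndMetaParser(HTMLParser):
    """Collect absolute http(s) links and <meta name=... content=...> pairs."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []   # unique links, in order of first appearance
        self.meta = {}    # lower-cased meta name -> content

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # Resolve relative links against the page URL and drop #fragments.
            absolute, _fragment = urldefrag(urljoin(self.base_url, attrs["href"]))
            is_web_link = urlsplit(absolute).scheme in ("http", "https")
            if is_web_link and absolute not in self.links:
                self.links.append(absolute)
        elif tag == "meta" and attrs.get("name") and attrs.get("content") is not None:
            self.meta[attrs["name"].lower()] = attrs["content"]


def extract_links_and_meta(html: str, base_url: str):
    """Return (links, meta) found in the given HTML document."""
    parser = LinkAndMetaParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links, parser.meta
```

Resolving links against the page URL and removing fragments keeps the same page from being indexed twice under slightly different addresses.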

HTTrack

HTTrack is a free and open-source tool for downloading and mirroring entire websites for offline viewing. It allows users to recursively fetch web pages, including HTML, images, and other files, while preserving the site's relative link structure so that the local copy can be browsed like the original. HTTrack offers a user-friendly interface and supports a wide range of options for customization, such as setting download limits and file type filters. While it's primarily focused on static content and may struggle with dynamic or heavily JavaScript-driven sites, HTTrack is ideal for creating offline archives, backups of websites, and accessing web content in environments with limited or no internet connectivity.

Pros:

- Free and open-source website mirroring and offline browsing tool.

- Allows users to download entire websites or specific directories for offline viewing.

- Supports recursive downloading with customizable options for depth and file types.

- Provides a user-friendly interface with graphical and command-line versions.

- Cross-platform compatibility with versions available for Windows, Linux, and macOS.

Cons:

- Limited to downloading static content and assets; it does not support dynamic content or interactions.

- May encounter issues with complex or JavaScript-driven websites.

Use Cases:

- Creating local backups or archives of personal websites or online portfolios.

- Downloading educational resources, tutorials, or documentation for offline access.

- Mirroring websites for offline browsing in environments with limited or restricted internet access.
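
Recursive downloading with depth and file type options can be pictured as a breadth-first walk over a site's links. The sketch below is not HTTrack's implementation. It is a simplified Python illustration that only plans which URLs would be fetched and downloads nothing. The function `plan_mirror`, its parameters, and the `get_links` callback (which is expected to return the links found on a page) are hypothetical.

```python
import posixpath
from collections import deque
from urllib.parse import urlsplit


def plan_mirror(start_url, get_links, max_depth=2,
                allowed_exts=(".html", ".css", ".png")):
    """Breadth-first walk from start_url, limited by depth and file extension."""
    start_host = urlsplit(start_url).hostname
    queue = deque([(start_url, 0)])
    seen = {start_url}
    to_download = []
    while queue:
        url, depth = queue.popleft()
        to_download.append(url)
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        for link in get_links(url):
            parts = urlsplit(link)
            ext = posixpath.splitext(parts.path)[1].lower()
            same_site = parts.hostname == start_host
            # An empty extension covers directory-style URLs such as "/docs/".
            wanted = ext in allowed_exts or ext == ""
            if same_site and wanted and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return to_download


if __name__ == "__main__":
    # A tiny in-memory "site" used instead of real network requests.
    site = {
        "https://example.com/": ["https://example.com/a.html",
                                 "https://example.com/big.zip"],
        "https://example.com/a.html": ["https://example.com/b.html"],
    }
    print(plan_mirror("https://example.com/", lambda u: site.get(u, []), max_depth=1))
```

Restricting the walk to the starting host and to a list of extensions mirrors the kind of scope and file type filters described above, while the depth limit keeps the crawl from growing without bound.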

Conclusion

At Real Data API, we understand the diverse needs of businesses and researchers seeking valuable insights from the web. Whether you're a seasoned developer or a beginner, the open-source web crawling tools and frameworks covered in this guide cater to a wide range of requirements. With our expertise, you can leverage these tools to unlock valuable data from the internet and gain a competitive edge in your industry. Explore our solutions today and discover how Real Data API can help you extract insights to drive your business forward!